The artificial intelligence (AI) revolution is driving unprecedented demand for two key resources: electricity and specialised semiconductors. Technology giants and financial markets paint a picture of almost unlimited growth, but beneath this narrative lies a fundamental conflict. On the one hand, projections of energy demand from data centres are rising exponentially. On the other, hard physical and geopolitical constraints limit the global capacity to produce the advanced chips needed to power this revolution. These two inextricably linked trends appear to be on a collision course.

A landmark report published by London Economics International (LEI) was the first to quantify this contradiction. LEI’s analysis shows that projections of energy demand in the US alone, once translated into chip demand, become impossible to satisfy under realistic scenarios for global chip supply.

AI gold rush: a tsunami of energy demand

The current technological era is dominated by a phenomenon that can aptly be described as the ‘AI gold rush’. It is driving investment on an unprecedented scale, which can be seen most clearly in the rapid growth of the data centre market – the physical infrastructure that is the backbone of artificial intelligence. The global data centre market is expected to grow from around USD 347.6 billion in 2024 to more than USD 652 billion by 2030, driven by growing demand for cloud services, Internet of Things (IoT) technologies and, above all, AI applications.

Artificial intelligence, particularly generative AI, is a major catalyst for this exponential increase in energy demand. Training large AI models is extremely energy-intensive, and using them afterwards (inference) also consumes significantly more energy than traditional computing tasks. It is estimated that a single query to a model such as ChatGPT uses around 10 times more energy than a traditional Google search. Furthermore, the graphics processing units (GPUs) and other accelerators used for AI draw significantly more power than traditional central processing units (CPUs).

The scale of this energy tsunami is reflected in forecasts from leading institutions. The International Energy Agency (IEA) warns that global data centre electricity consumption could double by 2026. Goldman Sachs estimates that global power demand from data centres will be 165% higher in 2030 than in 2023, and that AI’s share of this demand will rise from 14% to 27% as early as 2027. In the US, the projections are even more dramatic: by 2030, data centres could account for 9% to 12% of total national electricity consumption, compared with around 4.4% in 2023. This boom is driven mainly by the so-called hyperscalers (Amazon, Google, Microsoft and Meta), which plan to spend USD 217 billion on AI infrastructure in 2024 alone.

The great contradiction: the mathematics of the impossible

In the face of such astronomical forecasts, the LEI report is a sobering voice of reason. Its authors cast doubt on the reliability of forecasts of energy needs, exposing the fundamental contradiction between declared demand and the physical capacity to meet it.

The report’s key argument is simple but striking: forecasts of US data centre energy demand are systematically overestimated and therefore unreliable. To demonstrate this, LEI used an innovative methodology, comparing aggregate energy demand forecasts with the global physical capacity to produce a key component: advanced AI chips.

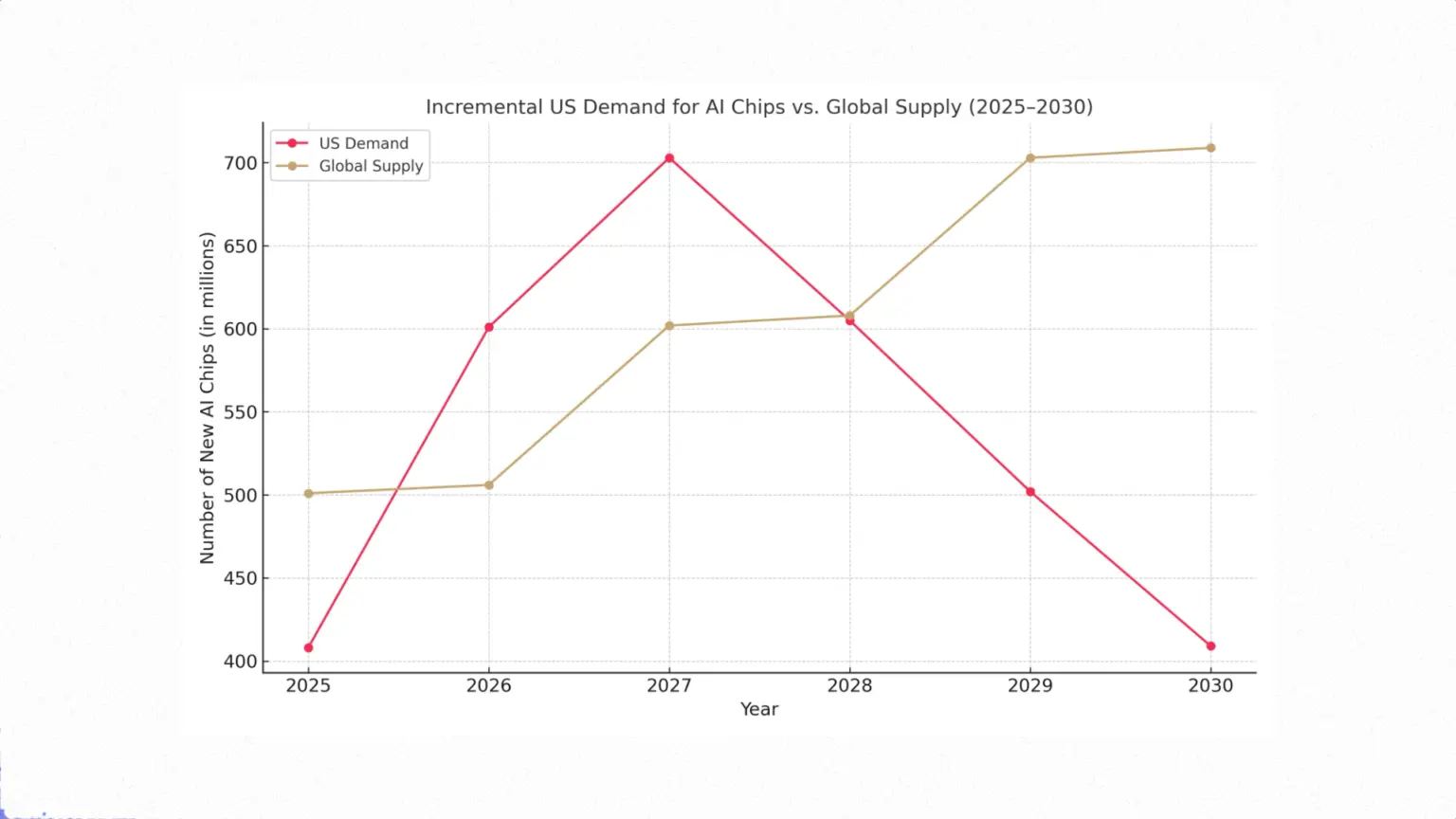

The results of this analysis are clear. LEI aggregated power demand forecasts from US grid operators covering 77% of the US power market. Together, these forecasts anticipate an additional 57 GW of data centre power demand between 2025 and 2030. The analysts then estimated that, even under very optimistic assumptions about the growth of global AI chip production, the total new chip supply over this period would be enough to support only about 63 GW of new data centre capacity worldwide.

Setting these two figures side by side leads to a startling conclusion: to meet the US operators’ projections, the US would have to absorb more than 90% of all new AI chips produced worldwide between 2025 and 2030. Such a scenario is clearly unrealistic. The US currently accounts for less than 50% of global chip demand, and other regions, such as Europe and Asia, are also rapidly building out their AI capabilities. The conclusion is inescapable: the forecasts on which US energy expansion plans are based are fundamentally unreliable and disconnected from the physical realities of global production.
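
The “more than 90%” figure comes down to a single division. The minimal sketch below reproduces it using only the two LEI figures cited above (57 GW of forecast US demand and 63 GW of data centre capacity that new global chip supply could support). The function name `us_share_of_new_supply` is a hypothetical label for illustration, not part of LEI’s methodology.

```python
# Minimal sketch: reproduce the LEI share calculation from the two cited figures.
# The function name is a hypothetical label, not part of LEI's methodology.

def us_share_of_new_supply(us_demand_gw: float, global_supply_gw: float) -> float:
    """Return the fraction of new global AI-chip supply (expressed as the data
    centre capacity, in GW, that it can support) the US would need to absorb."""
    if global_supply_gw <= 0:
        raise ValueError("global supply must be positive")
    return us_demand_gw / global_supply_gw


# Figures for 2025-2030 as cited in the text
US_FORECAST_GW = 57.0    # aggregated US grid-operator forecasts (77% of the market)
GLOBAL_SUPPLY_GW = 63.0  # optimistic estimate of capacity supportable by new chips

share = us_share_of_new_supply(US_FORECAST_GW, GLOBAL_SUPPLY_GW)
print(f"US share of new global supply: {share:.1%}")  # prints "US share of new global supply: 90.5%"
```

Because the underlying forecasts cover only 77% of the US power market, any additional data centre demand in the remaining regions would push the national share even higher.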

Fragile foundations: geopolitics, technology and talent

The contradiction exposed by the LEI report is deeply rooted in the extremely complex and disruption-prone structure of the global semiconductor supply chain.

Firstly, geopolitics. The manufacturing of advanced chips is dominated by a handful of countries and companies. At the centre of this ecosystem is Taiwan, and specifically TSMC, which controls more than 90% of the market for the most advanced chips. The entire digital economy is, de facto, hostage to political stability across the Taiwan Strait. The second pillar is South Korea, where Samsung and SK Hynix dominate the memory market, including the high-bandwidth memory (HBM) that is crucial for AI. This concentrated landscape has become the arena for a technology war between the US and China, leading to market fragmentation and supply chain disruption.

Secondly, technology. A key bottleneck is advanced packaging. TSMC’s Chip-on-Wafer-on-Substrate (CoWoS) technology is essential for integrating multiple chiplets, such as GPU dies and HBM stacks, into a single AI processor package. Despite rapid expansion, CoWoS production capacity has not kept pace with exploding demand, and this is a major factor limiting the supply of the most powerful accelerators.

Thirdly, people. Even if the geopolitical and technological barriers could be overcome, a shortage of skilled workers stands in the way. It is estimated that by 2030 the industry will be short of around 67,000 professionals in the US alone; globally, the gap could reach hundreds of thousands. New factories being built under initiatives such as the CHIPS Act could sit idle for lack of engineers and technicians to operate them.

Avoiding collision: efficiency and innovation

Faced with this looming collision, the technology industry must change course. There are two ways out of the impasse: radical efficiency improvements and the search for new computing paradigms.

In the short term, efficiency is key. The concept of ‘Green AI’ involves designing models to minimise their energy footprint. Model optimisation techniques such as pruning and quantisation can significantly reduce computing power requirements. Infrastructure efficiency is equally important: switching from air cooling to much more efficient liquid cooling can reduce a data centre’s energy consumption by up to 40%. However, it is important to bear in mind the so-called rebound effect (Jevons paradox): by making a resource cheaper to use, efficiency gains can encourage so much additional use that total consumption rises.
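
To make quantisation more concrete, here is a minimal sketch of one common textbook scheme, symmetric per-tensor int8 quantisation, in plain Python. It is an illustration under stated assumptions, not a method from the LEI report or any particular framework: the weight values are made up, and the function names `quantise_int8` and `dequantise` are hypothetical. Storing each weight in 1 byte instead of 4 reduces memory and data movement, which is one reason quantised models need less compute and energy; actual savings depend on hardware support for low-precision arithmetic.

```python
from array import array


def quantise_int8(weights: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantisation (hypothetical helper): map each
    float to an integer in [-127, 127] using a single shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantise(q: array, scale: float) -> list[float]:
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


# Hypothetical example weights, stored as 32-bit floats
weights = array("f", [0.82, -1.27, 0.05, 0.40, -0.33, 1.10])
q, scale = quantise_int8(list(weights))
restored = dequantise(q, scale)

print("bytes (float32):", weights.itemsize * len(weights))
print("bytes (int8):   ", q.itemsize * len(q))
print("max abs error:  ", max(abs(a - b) for a, b in zip(weights, restored)))
```

On platforms where a C `float` is 4 bytes, storage for these six weights falls from 24 bytes to 6, while each restored weight stays within half a quantisation step (`scale / 2`) of its original value. Production frameworks typically add refinements such as per-channel scales and calibration data.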

In the long term, a technological breakthrough is needed. The two most promising candidates are photonic and neuromorphic computing. Photonic computing, which uses light instead of electrons, could in theory offer orders-of-magnitude higher throughput and lower energy consumption. Neuromorphic computing, inspired by the architecture of the human brain, promises far greater energy efficiency for many AI tasks. Realistically, however, neither technology will be ready for mass deployment before the end of the decade.

Moving beyond the impossible race

The analysis leads to a clear conclusion: the ‘impossible race to the chip’ is not a distant threat but a present reality. The current growth trajectory is unsustainable. The constraints are systemic: geopolitical tensions, technological bottlenecks and a global talent gap form a web of interconnected barriers.

A change in thinking is required. Real victory in this race will not come from building the biggest and most energy-intensive supercomputer, but from charting a fundamentally new, sustainable path. The future belongs not to those who can power the largest AI models, but to those who figure out how to achieve the same or better results with radically lower energy and resource consumption. This is no longer a race for raw computing power. It is a race for intelligence: both the artificial intelligence we create and the human intelligence we must apply to manage this transformation responsibly. Moving beyond the ‘impossible race’ requires a shift from a paradigm of ‘more’ to a paradigm of ‘smarter’. This is the greatest challenge, and also the greatest opportunity, facing the technology industry in the coming decade.