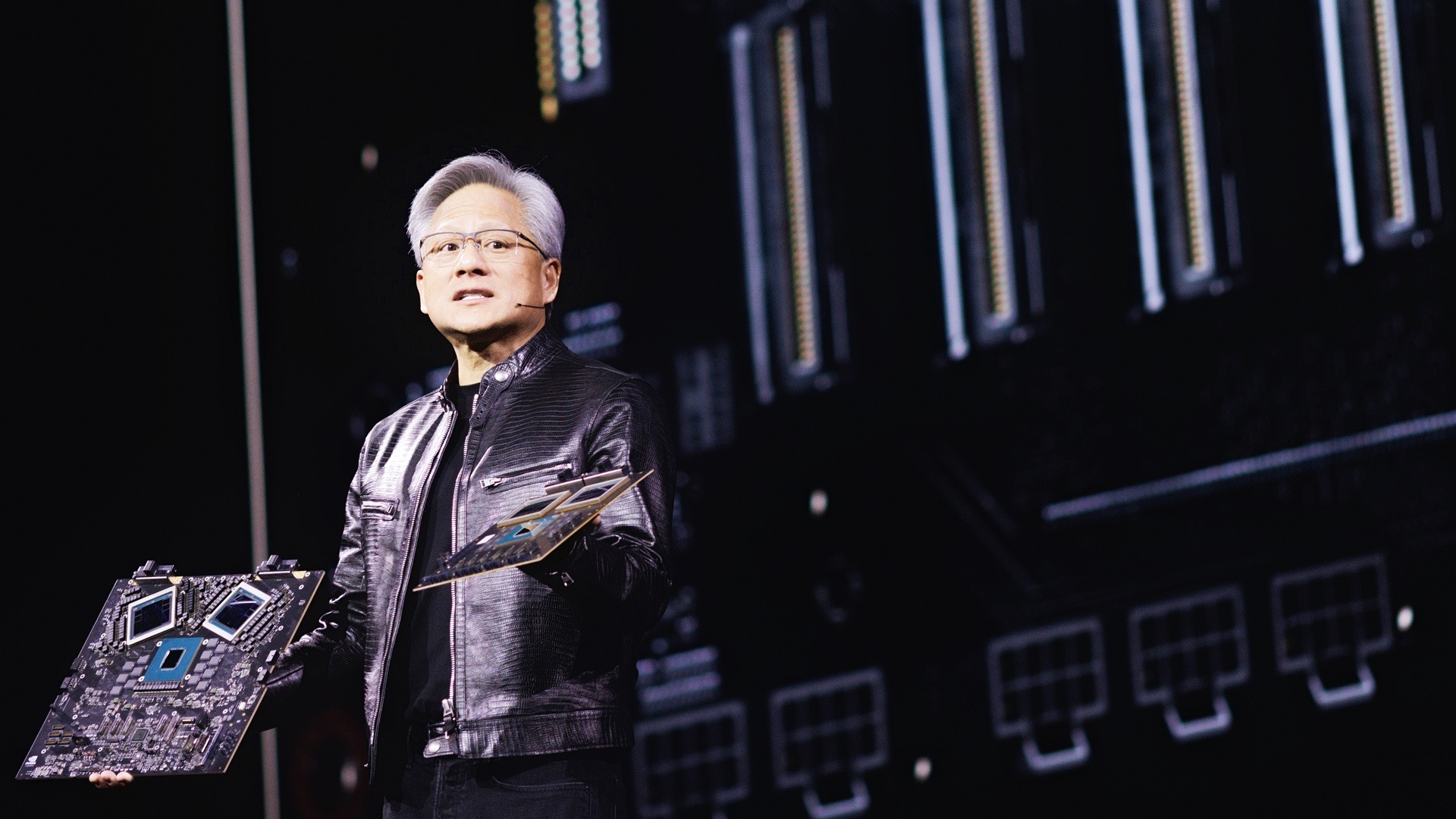

While Silicon Valley races to lead in language models, a much riskier game is under way in military technology. The REAIM summit in A Coruña, Spain, which aimed to develop standards for the responsible use of artificial intelligence in the military, ended in a telling stalemate. Fewer than half of the participating countries chose to sign a declaration of principles, and the biggest players, the US and China, were among the holdouts.

For defence and technology executives, the message is clear: despite the rhetoric about security, battlefield pragmatism is winning out over diplomacy. Only 35 of the 85 countries endorsed the 20 principles, which include human oversight of autonomous weapons and transparency in chains of command. European powers and South Korea signed, but without support from Washington and Beijing these commitments amount to little more than theory.

Dutch Defence Minister Ruben Brekelmans aptly diagnosed the situation as a classic ‘prisoner’s dilemma’. States face a choice: impose ethical constraints on themselves and risk falling behind their adversaries, or keep developing without limits for fear of losing a strategic advantage. Russia and China are rapidly increasing the autonomy of their combat systems, and that pressure makes even democratic allies reluctant to formalise any restrictions.

The current political climate adds another layer of uncertainty. Tensions between the US and Europe, and doubts about the future of the transatlantic relationship, meant that delegates approached any joint declaration with great caution. Although this year’s document was not legally binding, even the attempt to outline concrete policy proved too bold for those who see AI as a decisive asset in the coming decade.

From a business perspective, the lack of global consensus means that the defence AI market will remain a ‘Wild West’. Technology companies building systems for the military must navigate a regulatory vacuum in which ethical standards are set by individual government contracts rather than by international law. Until the major players accept that the risk of unintended escalation outweighs the benefits of technological dominance, a united front on military AI will remain an ambition on paper.