The latest AI processors from NVIDIA, the heart of a technological revolution, are so powerful that they generate a problem that eludes traditional solutions: extreme, concentrated heat. The need to efficiently cool server racks such as the NVIDIA GB200 is forcing the entire industry into a fundamental shift in which liquid cooling ceases to be a niche curiosity and becomes the new, unavoidable standard. This physics-enforced transformation is creating a billion-dollar market for specialist suppliers from scratch and forever changing the way the digital factories of the future – data centres – are designed and built.

Physics forces change

For decades, data centres have relied on a ubiquitous and cheap medium – air. Powerful air-conditioning systems and rows of fans pushed cool air through corridors and server racks, carrying heat away from running components. This model worked well until the density of computing power reached a critical point. Modern AI chips, such as those in NVIDIA’s Blackwell platform, pack an unprecedented number of transistors into a small footprint, generating hundreds of watts of heat per processor. In integrated racks such as the GB200, where dozens of such chips run side by side, traditional air cooling is simply no longer sufficient: it cannot remove the heat quickly enough, which leads to overheating, performance degradation and risk of failure.

It is no longer a matter of choice or optimisation. As TrendForce analysts note, the adoption rate of liquid cooling solutions for high-performance AI chips is steadily and rapidly increasing. We are moving from an era where liquid cooling was the domain of enthusiasts and niche supercomputers, to an era where it is becoming the standard configuration for any serious AI deployment.
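The physical argument can be made concrete with the steady-state heat balance Q = ṁ · c_p · ΔT, which gives the coolant flow needed to carry away a given heat load. The sketch below is illustrative only: the 100 kW rack load and the 10 K coolant temperature rise are assumed round numbers rather than GB200 specifications, and the fluid properties are approximate room-temperature textbook values.

```python
# Rough comparison of the air flow and water flow needed to remove the same heat.
# All inputs are illustrative assumptions, not vendor specifications.

def volumetric_flow_m3_per_s(heat_w, density_kg_m3, cp_j_per_kg_k, delta_t_k):
    """Volumetric flow required to absorb heat_w with a coolant temperature rise of delta_t_k."""
    mass_flow_kg_s = heat_w / (cp_j_per_kg_k * delta_t_k)  # from Q = m_dot * c_p * dT
    return mass_flow_kg_s / density_kg_m3

RACK_HEAT_W = 100_000.0  # assumed rack heat load (hypothetical round number)
DELTA_T_K = 10.0         # assumed temperature rise of the coolant across the rack

# Approximate properties near room temperature
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "cp_j_per_kg_k": 4186.0}

air_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, delta_t_k=DELTA_T_K, **AIR)
water_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, delta_t_k=DELTA_T_K, **WATER)
ratio = air_flow / water_flow

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs about {ratio:.0f}x the volume flow of water")
```

Under these assumptions the rack would need roughly 8 m³ of air per second but only about 2.4 litres of water per second, a volume ratio of around 3,500. The exact figures depend on the real heat load and allowable temperature rise, but the ratio itself depends only on the fluid properties, which is why the shift is driven by physics rather than preference.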

Birth of a new supply chain

This technological necessity has opened the door to a whole new ecosystem of companies whose products have suddenly become critical to the operation of the world’s most powerful computing systems. At the centre of this revolution are components that, a few years ago, were known only to specialists:

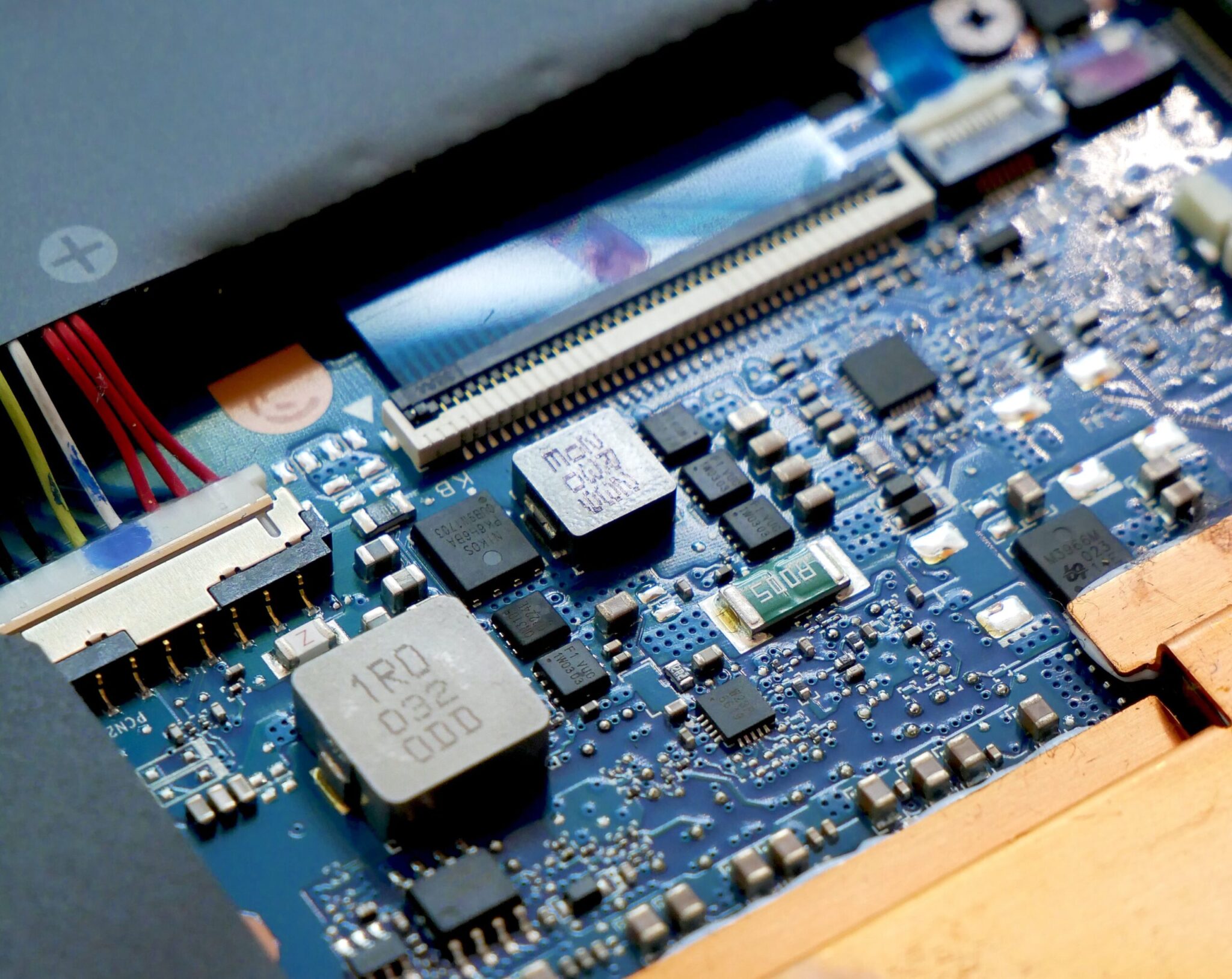

- Cold plates: Precision-made blocks of metal with internal channels, mounted directly on the processors, through which coolant flows, picking up heat at its very source.

- Manifolds: Systems of tubes and valves that distribute coolant to individual components inside the server, ensuring an even flow.

- Quick disconnect (QD) connectors: Advanced couplings that allow fluid lines to be connected and disconnected quickly without the risk of leakage, which is crucial for servicing and scaling systems.

Leaders are already emerging in this hot new market. Fositek, with its parent company AVC, is positioning itself as a key beneficiary of this wave. Working closely with NVIDIA, the company is supplying key QD connectors designed specifically for the flagship GB300 platform and intended to pair with cold plates from AVC. That’s not all, however. Fositek has also begun mass-producing components for systems based on custom ASICs from AWS, directly challenging incumbent giants such as Denmark’s Danfoss. This signals that new players are gaining ground rapidly.

Another dynamic competitor is Auras. The company is building its position on a broad customer base that includes leading server brands such as Oracle, Supermicro and HPE. Auras’ strategic moves, including the start of supplies to Meta and ambitious plans to enter the supply chain for the GB200 platform, show how fierce the battle is to become a trusted supplier to the cloud giants.

Paradigm shift in data centre construction

The move to liquid cooling is much more than replacing components inside a server. It is a fundamental change in the way the entire data centre infrastructure is designed, built and managed. Deploying liquid cooling on a large scale requires complex plumbing, including dedicated piping at both rack and facility level, powerful coolant distribution units (CDUs) and external cooling towers that dissipate heat to the atmosphere.

As a result, a new design standard has been born in the industry: ‘liquid-cooling ready’ data centres. New facilities are being planned from the ground up with this technology in mind. This affects their architecture, room layout, power systems and, of course, the investment budget. Although the initial costs are higher, the long-term benefits are hard to overstate. Liquid cooling offers significantly higher thermal efficiency, which allows hardware to be packed more densely and computing power to be scaled more easily in the future, and it can lead to significant energy savings compared with energy-intensive air-conditioning systems.

Value shifts towards infrastructure

The AI revolution is shifting the centre of gravity in the hardware value chain. It is no longer just the silicon itself – the processors and accelerators – that determines the strength and value of a system. The advanced infrastructure that enables its stable and efficient operation is becoming equally critical. Thermal management has risen from a secondary technical issue to one of the key pillars of modern computing power.