Hewlett Packard Enterprise unveiled the next generation of its supercomputing solutions yesterday (13 November), making a clear strategic bet. In an era of resource-intensive AI models that are redefining data centres, HPE is unifying its HPE Cray architecture to serve both AI workloads and traditional scientific simulation (HPC). This is a direct response to growing demand from research labs, government agencies and enterprises that no longer want to maintain separate, costly silos for the two worlds.

The company announced that the HPE Cray Supercomputing GX5000 platform, introduced in October, has already won key customers. Two German supercomputing centres, HLRS in Stuttgart (the ‘Herder’ system) and LRZ in Bavaria (the ‘Blue Lion’ system), have chosen it for their next-generation machines. Their motivation is clear: they need a platform that seamlessly combines simulation with AI while also being extremely energy-efficient. Prof. Dieter Kranzlmüller of LRZ emphasises that direct liquid cooling with water at up to 40°C will allow the campus to reuse the waste heat.

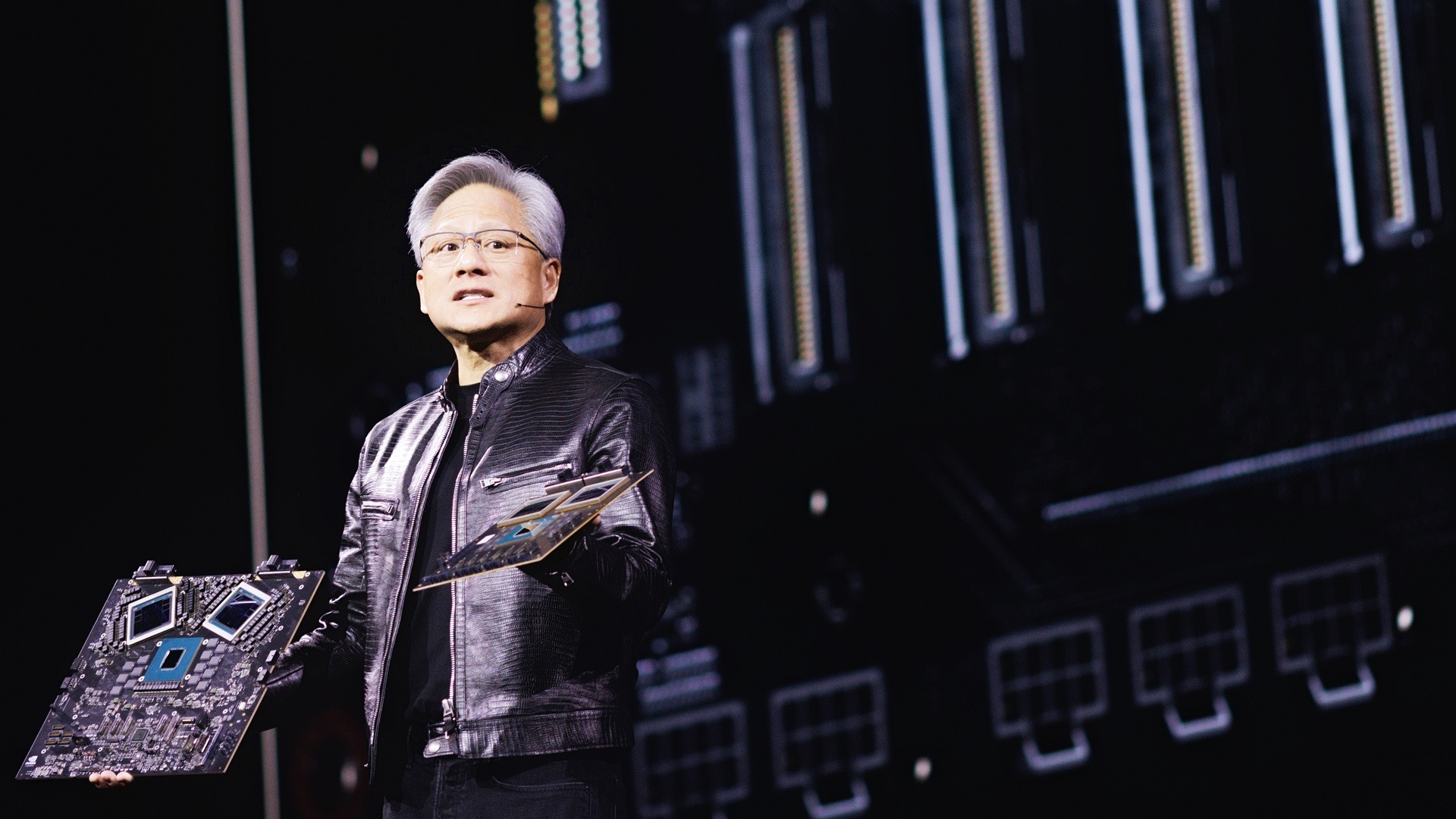

At the core of Thursday’s announcement are three new liquid-cooled compute modules. HPE is betting on flexibility and partnerships here, offering configurations based both on NVIDIA’s next-generation Rubin GPUs and Vera CPUs (in the GX440n module) and on competing AMD Instinct MI430X accelerators and ‘Venice’ EPYC processors (in the GX350a and GX250 modules). Compute density and full liquid cooling are the priorities, aimed at the growing energy challenge.

The supercomputer is not just about computing power, however: HPE is upgrading the entire platform. The HPE Slingshot 400 network is expected to provide the 400 Gbps throughput needed to scale AI jobs across thousands of GPUs. New HPE Cray K3000 storage systems, based on ProLiant servers and the open-source DAOS (Distributed Asynchronous Object Storage) software, are in turn meant to ease data-access bottlenecks, a critical issue for AI models.

The whole stack is tied together by updated HPE Supercomputing Management Software, which emphasises management of multi-tenant environments, virtualisation and fine-grained control of energy consumption across the system.

While the announcement is strategically significant and secures HPE’s position in future multi-year contracts, IT market analysts will need to be patient. Most of the unveiled compute modules (with Rubin and MI430X chips) and the software updates will not be available until “early 2027”. The K3000 storage is due somewhat sooner, in “early 2026”. This is a clear indication that yesterday’s announcement is primarily a roadmap presentation and a response to competitors’ plans, rather than a launch of products that companies can order in the coming quarters.