At CES, Nvidia officially confirmed what had been talked about behind the scenes for months: the Blackwell era already has a successor. Jensen Huang unveiled the Rubin platform, which is set to define artificial intelligence infrastructure in the second half of 2026.

The new architecture is more than another performance leap. Above all, it is a clear signal to competitors that Nvidia intends to remain the dominant supplier in hyperscalers' data centres.

At the heart of the new ecosystem is the Rubin GPU, which is expected to offer up to five times the performance of its predecessor in inference tasks. The chip features 336 billion transistors and delivers 50 petaflops of computing power in NVFP4 format.
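The two inference figures above can be combined to see what they imply about the predecessor. The short Python sketch below divides the quoted throughput by the quoted speedup; the function name `implied_baseline` is purely illustrative, and because the claim is "up to" five times, the result is best read as a rough figure rather than an official specification.

```python
# Figures quoted in the article (NVFP4, inference).
new_gpu_pflops = 50.0   # petaflops claimed for the new GPU
claimed_speedup = 5.0   # "up to five times" the predecessor


def implied_baseline(new_pflops: float, speedup: float) -> float:
    """Return the predecessor throughput implied by a quoted figure and speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return new_pflops / speedup


baseline_pflops = implied_baseline(new_gpu_pflops, claimed_speedup)
print(f"Implied predecessor throughput: {baseline_pflops:.1f} petaflops")
# prints "Implied predecessor throughput: 10.0 petaflops"
```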

For training AI models, the gain is more modest but still impressive: 35 petaflops, a 3.5-fold increase over the Blackwell architecture.

A key change in Nvidia’s strategy, however, is the increasingly tight integration of CPU and GPU. Rubin is accompanied by the Vera CPU, based on the Arm architecture and featuring 88 proprietary Olympus cores.

The combination of the two chips forms the Vera Rubin superchip, named after the famous American astronomer. This tandem is set to replace the popular Grace Hopper solution, with chip-to-chip communication handled by the sixth-generation NVLink interconnect.

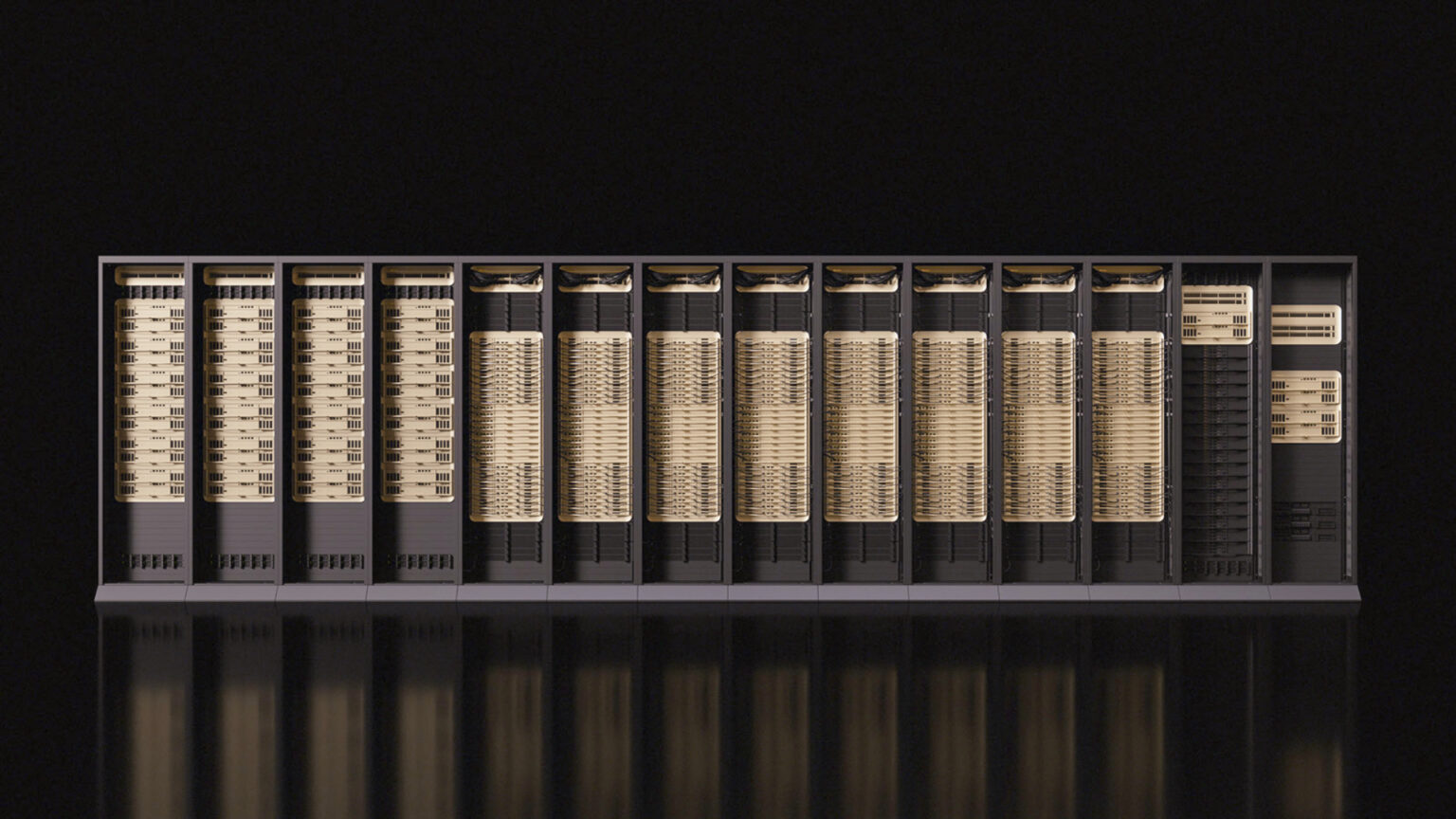

Nvidia is clearly aiming to sell complete systems, not just components. The flagship product is the Vera Rubin NVL72, a fully integrated, liquid-cooled server rack that combines accelerators, processors, the new ConnectX-9 networking chips and BlueField-4 DPUs into one cohesive AI-HPC system.

For customers who prefer x86 architecture, there is a smaller DGX Rubin NVL8 system, pairing eight Rubin GPUs with Intel Xeon 6 chips.

The economics of this solution are expected to convince major players. Nvidia claims that the cost per inference token will drop tenfold compared to the current generation. This is a key argument for OpenAI, Microsoft, Google and AWS, which are already queuing up for the new hardware. The first systems based on the Rubin platform are expected to reach partners in the second half of this year, with full cloud availability to follow in the subsequent months, cementing Nvidia’s dominance in the generative AI era.