In January 2024, an employee in the finance department of the multinational engineering firm Arup received an email that appeared to come from the chief financial officer (CFO) at the company's UK headquarters. The email referred to a confidential transaction and included an invitation to a video conference. Although initially suspicious, the employee joined the call. On screen he saw not only the CFO but also several other colleagues he recognised, their faces and voices convincingly reproduced. Convinced that the meeting was genuine, over the next few days he authorised 15 transfers totalling $25.6 million. Only afterwards did he discover that he had fallen victim to one of the most audacious frauds on record: every other participant in the video conference was a digital clone generated by artificial intelligence.

This incident is not a sci-fi movie scenario, but the brutal reality of a new era of cyber threats. Welcome to the world of Phishing 2.0 – an evolution of phishing that, thanks to artificial intelligence, machine learning and deepfake technology, has become more sophisticated, personalised and dangerous than ever before. Traditional attacks, which we have learned over the years to recognise by grammatical errors and generic phrases, are becoming a thing of the past. In their place are campaigns that are almost indistinguishable from authentic communication, precisely targeting specific individuals and capable of bypassing traditional defences.

Artificial intelligence is no longer just a tool that improves phishing; it is fundamentally redefining it. It democratises access to advanced attack techniques that were once the preserve of specialised hacking groups, and it fuels an arms race in cyberspace. In this new reality, attackers and defenders are locked in a battle of algorithms, with data, money and trust, the foundation of the digital economy, at stake.

| Feature | Phishing 1.0 (before the era of AI) | Phishing 2.0 (AI-supported) |
|---|---|---|
| Language and grammar | Frequent errors, unnatural wording. | Flawless grammar that imitates the writing style of specific individuals. |
| Personalisation | Generic phrases such as “Dear Customer”. | Hyper-personalisation using social media data and public records. |
| Scale and speed | Manual campaigns limited by resources. | Automated generation of thousands of unique messages in minutes. |
| Attack vectors | Mainly email. | Multichannel: email, SMS (smishing), voice calls (vishing), social media. |
| Evasion tactics | Simple domain impersonation. | Dynamic page cloning, AI-assisted code obfuscation, deepfake audio and video. |
| Required skills | Basic technical knowledge. | Low barrier to entry thanks to AI tools and Phishing-as-a-Service (PhaaS) platforms. |
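Domain impersonation, listed in the table as a Phishing 1.0 tactic, is still one of the cheapest things to check automatically, because even an AI-cloned login page has to be hosted on some domain. The sketch below, assuming a hypothetical allow-list of trusted domains, flags sender domains that lie within a small edit (Levenshtein) distance of a trusted one. `lookalike_of` and the default threshold of 2 are illustrative choices, not part of any product's API.

```python
from typing import Iterable, Optional


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # deletion
                           cur[j - 1] + 1,              # insertion
                           prev[j - 1] + (ca != cb)))   # substitution (free if equal)
        prev = cur
    return prev[-1]


def lookalike_of(domain: str, trusted: Iterable[str], max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain that `domain` appears to imitate, or None.

    Hypothetical helper: an exact match is legitimate; a near match is suspicious.
    """
    d = domain.lower().rstrip(".")
    candidates = [t.lower() for t in trusted]
    if d in candidates:
        return None
    for t in candidates:
        if levenshtein(d, t) <= max_distance:
            return t
    return None
```

Edit distance catches typo-style fakes such as `rnicrosoft.com`, but not look-alike characters from other scripts or brand names embedded in unrelated domains, and very short domains produce false positives, so it is best treated as one signal among several.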

Anatomy of a Phishing 2.0 attack: An AI-driven arsenal

A modern phishing attack is a complex, multi-stage process in which artificial intelligence plays a key role at every step. At the core of Phishing 2.0 are large language models (LLMs) such as GPT-4, along with uncensored counterparts available on the darknet, such as WormGPT and FraudGPT. These tools give cybercriminals an inexhaustible source of well-written, psychologically compelling content. They eliminate grammatical errors, mimic the communication style of specific individuals and can produce persuasive narratives from just a few simple prompts.

The effectiveness of Phishing 2.0 rests on hyper-personalisation, which in turn depends on the quality of the data collected. Artificial intelligence has automated reconnaissance through open-source intelligence (OSINT), systematically searching the digital footprint of potential victims. AI algorithms aggregate information from social media, corporate websites and public records to learn about a victim's interests, professional relationships and recent activities. Details such as the name of a project, a supervisor's name or a recent holiday are then woven into the message, making the scam appear highly authentic.

Artificial intelligence has also enabled the mass production and distribution of attacks. ‘Phishing-as-a-Service’ (PhaaS) platforms have emerged, such as ‘SpamGPT’, which mimic the interface of legitimate marketing services but serve a criminal purpose. They offer an integrated AI assistant for generating templates, automating mailings and tracking analytics, allowing even those with few technical skills to conduct sophisticated large-scale operations.

One of the biggest challenges is Phishing 2.0's ability to bypass traditional security filters, because AI is used to create dynamic threats. AI tools can generate convincing replicas of legitimate login pages that are updated in real time. Analysts at Microsoft Threat Intelligence identified a campaign in which AI was used to hide malicious code inside an image file, disguising it with business terminology to confuse scanners. Criminals are also abusing trusted developer platforms to host fake sites behind CAPTCHA checks, which block automated scanners but let the human victim through to the phishing page.

The integration of AI into phishing amounts to the industrialisation of cybercrime: a shift from an ‘artisanal’ model to an ‘industrial’ one. AI has become a production line that automates the entire attack process on a previously unattainable scale.

The human element under siege: Deepfakes and psychological manipulation

The most worrying front in the evolution of phishing is the use of AI to create hyper-realistic imitations of voices and faces. Deepfake technology undermines our trust in the evidence of our own senses. A few seconds of recorded speech can be enough to create a convincing voice clone, which attackers then use in voice messages or in real-time phone calls (vishing).

Analysis of real incidents shows the devastating potential of this technology. In the Arup case, an employee who initially suspected phishing was completely convinced after a video conference with digital clones of his colleagues. In another attack, the CEO of a UK energy company authorised a transfer of $243,000 after a phone call in which the attackers used a cloned voice of his superior.

However, there are also examples of foiled attempts that offer valuable lessons. An attack on Ferrari was stopped when a manager asked the supposed CEO a follow-up question about a recent private conversation, which the impostor was unable to answer. At Wiz, the attempt failed because employees noticed a subtle difference between the CEO's voice in public appearances (on which the clone had been trained) and the way he speaks in everyday conversation. In a third case, a LastPass employee ignored contact from the supposed CEO because it came through an unusual channel (WhatsApp) and outside normal working hours.

These cases reveal a fundamental weakness of deepfake technology: the ‘contextual gap’. AI can replicate patterns, but it cannot replicate authentic, shared human experience. It does not know the content of private conversations or the subtle nuances of interactions. This gap is a new battleground on which the ‘human firewall’ can claim victory.

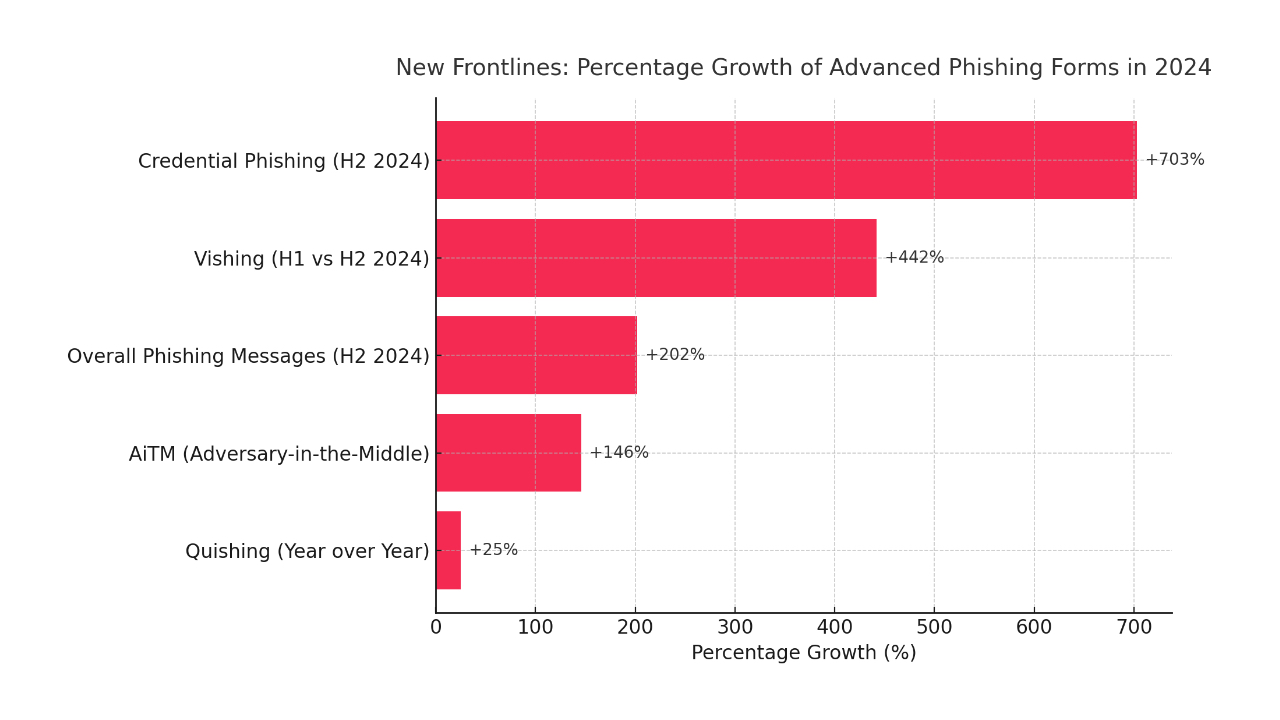

The data behind the threat: Quantifying the impact

The scale of the transformation is reflected in hard data. One report recorded a 1,265% increase in malicious phishing emails and attributed it directly to the uptake of generative AI (GenAI). Total phishing volume has reportedly risen by 4,151% since ChatGPT's launch in late 2022.
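Percentage increases of this size are easier to grasp as multipliers. An increase of $p$ percent means the new volume is $1 + p/100$ times the baseline, whatever baseline each report measured against:

$$
\frac{V_{\text{new}}}{V_{\text{old}}} = 1 + \frac{p}{100}, \qquad p = 1265 \;\Rightarrow\; 13.65, \qquad p = 4151 \;\Rightarrow\; 42.51
$$

In other words, the second figure describes roughly 42.5 times the earlier volume, not 41.5 times.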

This growth in attacks translates into growing financial losses. The average cost of a data breach in which phishing was the initial attack vector reached $4.8 million in 2024. Losses from Business Email Compromise (BEC) attacks reached a record $2.9 billion.

Moreover, an experiment run by Hoxhunt found that by March 2025 an AI agent was creating phishing campaigns 24% more effective than those of an elite team of human experts. This suggests that artificial intelligence is already outperforming skilled humans at manipulating people.

Although the overall volume of attacks is increasing, a strategic shift is also under way. Attackers are moving away from mass campaigns towards precisely targeted operations against high-value departments such as finance and HR. Microsoft remains the most commonly impersonated brand, appearing in more than half (51.7%) of the scams analysed.

Fighting fire with fire: AI-driven defence

In response, the cyber security industry has also turned to AI, creating a new generation of intelligent defences. Unlike traditional filters, defensive AI is adaptive and contextual. It relies on behavioural analysis: it builds a dynamic profile of normal communication patterns and detects anomalies, such as a sudden change of tone in an email from a known sender. Natural language processing (NLP) tools analyse message content for subtle signs of manipulation.
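A minimal sketch of the behavioural idea, assuming a single hand-picked feature: each sender gets a baseline of how much "pressure" language their messages usually contain, and a new message is flagged when it scores several standard deviations above that baseline. The keyword list, `SenderBaseline` and its thresholds are hypothetical; production systems learn many features with trained models rather than counting words.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev

# Hypothetical keyword list standing in for a trained NLP model.
PRESSURE_TERMS = {"urgent", "immediately", "confidential", "wire", "secret", "asap"}


def pressure_score(text: str) -> float:
    """Fraction of words in the message that are pressure/urgency terms."""
    words = [w.strip(".,!?:;\"'()").lower() for w in text.split()]
    words = [w for w in words if w]
    if not words:
        return 0.0
    return sum(w in PRESSURE_TERMS for w in words) / len(words)


@dataclass
class SenderBaseline:
    """Running profile of one sender's normal tone."""
    history: list = field(default_factory=list)
    min_history: int = 5        # do not judge until enough messages have been seen
    z_threshold: float = 3.0    # standard deviations above normal that count as anomalous
    sigma_floor: float = 0.01   # avoids division by zero for very uniform senders

    def observe(self, text: str) -> None:
        """Add a known-good message to the sender's baseline."""
        self.history.append(pressure_score(text))

    def is_anomalous(self, text: str) -> bool:
        """True if the message is far more pressuring than this sender's norm."""
        if len(self.history) < self.min_history:
            return False
        mu = mean(self.history)
        sigma = max(pstdev(self.history), self.sigma_floor)
        return (pressure_score(text) - mu) / sigma > self.z_threshold
```

The per-sender baseline is the point: the same urgent wording might be normal for an operations desk but highly unusual for a CFO who always writes formal, unhurried emails.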

Artificial intelligence is also transforming the work of security operations centre (SOC) teams by automating log analysis and alert triage, allowing human analysts to focus on the most complex incidents. Interestingly, the same large language models used to write phishing messages are also proving effective at detecting them.

This evolution is forcing a fundamental change of philosophy in cyber security: a shift from a ‘state’-based model (is this element known to be bad?) to a ‘behaviour’-based model (is this element behaving strangely?). The new AI-driven model is less interested in ‘what it is’ than in ‘how it behaves’.

Building a resilient organisation: A multi-layered strategy

Effective defence requires an integrated approach that combines technology, processes and informed people. Traditional awareness training is no longer sufficient; programmes must now prepare employees to confront deepfakes. Out-of-band verification of every sensitive request, for example confirming an emailed instruction with a phone call to a known number, becomes crucial. The Ferrari example also shows the power of simple security questions based on shared, private context.
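The out-of-band rule can be sketched as a small function. It assumes a hypothetical internal directory mapping identities to verified phone numbers and a hypothetical set of approved channels. The key design choice is that the callback number never comes from the request itself, since an attacker controls everything inside it; the channel check echoes the LastPass case.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical policy: channels on which sensitive requests may legitimately arrive.
APPROVED_CHANNELS = {"email", "phone", "video"}


@dataclass(frozen=True)
class SensitiveRequest:
    claimed_sender: str                       # identity the requester claims to be
    channel: str                              # channel the request arrived on
    supplied_callback: Optional[str] = None   # contact number given inside the request


def plan_verification(req: SensitiveRequest,
                      directory: Dict[str, str]) -> Tuple[str, List[str]]:
    """Return the number to call for out-of-band confirmation, plus warnings.

    The number always comes from the internal directory, never from the request.
    """
    known = directory.get(req.claimed_sender)
    if known is None:
        raise LookupError(f"no directory entry for {req.claimed_sender}; escalate")
    warnings = []
    if req.supplied_callback is not None and req.supplied_callback != known:
        warnings.append("request supplies a callback number that differs from the directory")
    if req.channel not in APPROVED_CHANNELS:
        warnings.append(f"unusual channel for a sensitive request: {req.channel}")
    return known, warnings
```

Warnings do not replace the call; they tell the person verifying that the request already shows red flags worth mentioning when escalating.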

Technology must provide a solid foundation. The Zero Trust philosophy (‘never trust, always verify’) is becoming a fundamental defence strategy. Phishing-resistant multi-factor authentication (MFA) based on the FIDO2 standards, for example hardware security keys, is also essential: the credential is bound to the legitimate site's origin, so it cannot be used on a look-alike page, and a stolen password alone becomes useless.
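FIDO2's phishing resistance comes largely from origin binding: the browser scopes each credential to the site it was registered with and records the page's origin in the client data that the authenticator's signature covers, and the server then checks that origin. The sketch below shows only that server-side check on the WebAuthn `clientDataJSON` fields (`type`, `challenge`, `origin`). It is a partial illustration, not a complete verifier: a real relying party must also validate the RP ID hash, user-presence flags and the signature, normally via a maintained WebAuthn library. `client_data_ok` is a hypothetical name.

```python
import base64
import hmac
import json


def b64url_decode(value: str) -> bytes:
    """Decode unpadded base64url, the encoding used for the challenge field."""
    return base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))


def client_data_ok(client_data_json: bytes, expected_challenge: bytes,
                   expected_origin: str) -> bool:
    """Check the origin-binding fields of an authentication assertion's clientDataJSON.

    Partial check only: RP ID hash, flags and signature verification are omitted.
    """
    data = json.loads(client_data_json)
    if data.get("type") != "webauthn.get":
        return False
    # The browser, not the page's script, fills in the origin, so a look-alike
    # domain cannot make this field read as the real site.
    if data.get("origin") != expected_origin:
        return False
    challenge = b64url_decode(data.get("challenge", ""))
    # Constant-time comparison against the nonce the server issued for this login.
    return hmac.compare_digest(challenge, expected_challenge)
```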

Analysts such as Gartner forecast a shift in how security budgets are allocated: by 2030, more than half of spending is expected to go to preventive measures rather than post-incident response. This reflects an acknowledgement that traditional models are too slow to counter attacks that move at the speed of AI.

The most effective defence mechanisms are no longer purely technical; they are integrated into business processes. The failure at Arup was a process failure – the financial procedure lacked a mandatory, non-digital verification step. The Ferrari success, on the other hand, was a process success. The solution requires a change in the way work is done. IT leaders must become business process engineers, building verification steps directly into high-risk workflows.
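To illustrate a verification step built into the workflow rather than left to individual judgement, here is a hypothetical payment-release gate. Assumptions: amounts are tracked per payment instruction whose ID is assigned by the finance system (not by the requester), and once an instruction's cumulative total reaches a threshold, release requires both a recorded out-of-band confirmation and two distinct approvers. Tracking the cumulative total matters because one request can be split into many smaller transfers, as with the 15 transfers at Arup.

```python
from decimal import Decimal
from typing import Dict, Set


class PaymentReleaseGate:
    """Hypothetical payment-release rule; threshold and approver count are illustrative."""

    def __init__(self, threshold: Decimal) -> None:
        self.threshold = threshold
        # Cumulative amount already released per payment instruction.
        self.released: Dict[str, Decimal] = {}

    def release(self, instruction_id: str, amount: Decimal,
                out_of_band_verified: bool, approvers: Set[str]) -> bool:
        """Return True and record the transfer if policy allows it, else False."""
        cumulative = self.released.get(instruction_id, Decimal("0")) + amount
        needs_checks = cumulative >= self.threshold
        if needs_checks and not (out_of_band_verified and len(approvers) >= 2):
            return False  # blocked: escalate rather than pay
        self.released[instruction_id] = cumulative
        return True
```

A blocked transfer is not recorded, so the running total reflects only money that actually left the account.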

Navigating the future of digital deception

Phishing 2.0, driven by AI, is not a hypothetical threat but a current reality. It is more personalised, persuasive and operates on an industrial scale. Deepfake technology has undermined our fundamental trust in sensory evidence.

We are entering a new era in which AI has democratised advanced attacks, and defences must be equally intelligent. Experts predict a further escalation of this arms race, including the emergence of autonomous, multi-agent AI systems (‘swarms of agents’) that conduct complex operations on both the attack and defence side. The UK's National Cyber Security Centre (NCSC) predicts that AI will continue to shorten the time between vulnerability disclosure and exploitation.

Resilience is a hybrid of smart technology and an equally smart, sceptical workforce. The ultimate defence is a holistic strategy that combines the predictive power of AI with the contextual judgement of a well-trained team that follows verification procedures. The fight against digital deception has reached a new level, and our ability to adapt will determine its outcome.