It is commonly assumed that the worst outages are caused by DDoS attacks or catastrophic errors in business logic. However, the events of 18 November 2025 at Cloudflare reminded us of a much more insidious enemy: routine changes that awaken dormant bugs.

Anyone who manages distributed systems is familiar with this scenario: everything works as planned, the tests are green and deployment seems a formality. And yet, moments later, the dashboards are glowing red. This analysis of an incident that affected one of the web's key services does more than chronicle the events: it is above all a case study for SRE and DevOps engineers. It shifts the focus of the discussion from “how do you fix it?” to the much more difficult question: “how do you detect something that theoretically doesn’t exist?”

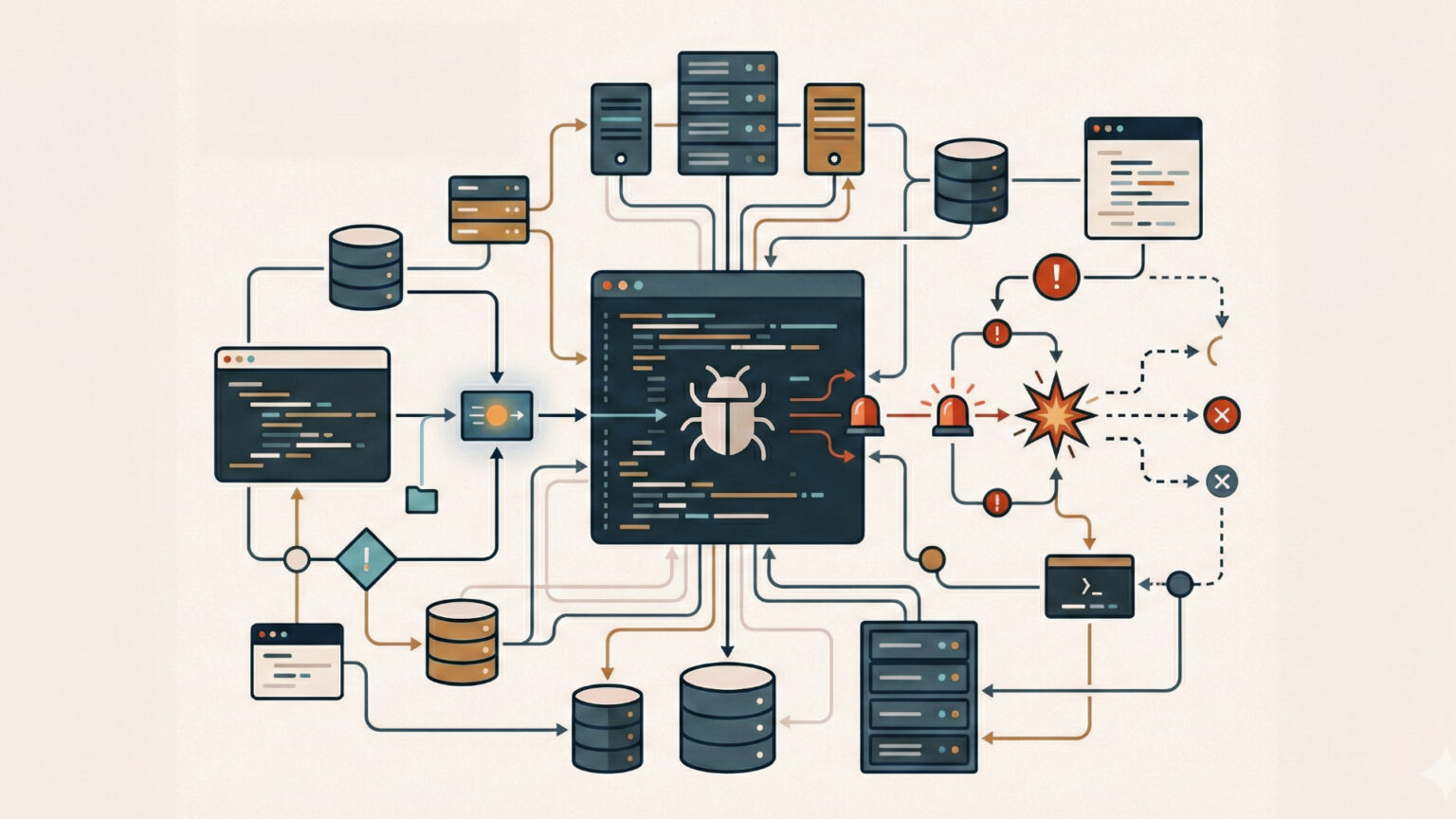

Latent bug in the code

Experts analysing this case draw attention to the concept of a ‘latent bug’. This is a defect that is completely harmless under normal conditions. It lies dormant, waiting for a specific, rare combination of events.

In the case in question, the mechanism was almost textbook. On the one hand, there was a hard limit in the Rust code (a maximum of 200 features in the configuration file), designed as a performance optimisation. On the other, there was a routine change to the ClickHouse database, after which a query unexpectedly returned duplicate metadata. The result? The configuration file doubled in size, exceeding a limit that the system had ‘forgotten’ existed because it had never been exercised under boundary conditions.

This led to a panic (the now-famous `unwrap()` on an error value) and a cascading failure. The lesson is brutal: a performance optimisation that is not protected by resilience logic becomes technical debt.
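To make the contrast concrete, here is a minimal sketch of the alternative to `unwrap()`: the limit check returns an error, and the caller keeps the last known good configuration. This is not Cloudflare's code. The names `FeatureTable`, `LoadError` and `apply_update` are hypothetical, and `MAX_FEATURES` simply mirrors the hard limit of 200 described above.

```rust
use std::fmt;

/// Hypothetical hard limit, mirroring the cap of 200 described in the text.
const MAX_FEATURES: usize = 200;

#[derive(Debug, PartialEq)]
pub enum LoadError {
    TooManyFeatures { got: usize, limit: usize },
}

impl fmt::Display for LoadError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            LoadError::TooManyFeatures { got, limit } => {
                write!(f, "{got} features exceed the limit of {limit}")
            }
        }
    }
}

/// A table with preallocated capacity: the performance optimisation stays.
pub struct FeatureTable {
    features: Vec<String>,
}

impl FeatureTable {
    /// Returns an error instead of panicking when the limit is exceeded.
    pub fn load(names: &[&str]) -> Result<Self, LoadError> {
        if names.len() > MAX_FEATURES {
            return Err(LoadError::TooManyFeatures {
                got: names.len(),
                limit: MAX_FEATURES,
            });
        }
        let mut features = Vec::with_capacity(MAX_FEATURES);
        features.extend(names.iter().map(|n| n.to_string()));
        Ok(Self { features })
    }

    pub fn len(&self) -> usize {
        self.features.len()
    }
}

/// Applies an update, keeping the current table if the new one is rejected.
pub fn apply_update(current: FeatureTable, names: &[&str]) -> FeatureTable {
    match FeatureTable::load(names) {
        Ok(next) => next,
        Err(err) => {
            // In a real system this would also raise an alert.
            eprintln!("rejecting config update: {err}");
            current
        }
    }
}
```

The design choice here is that an oversized file degrades to "stale but working" rather than to a crashed process. Whether serving a stale configuration is acceptable depends on the module, so treat this as one possible policy, not the only one.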

Observability is not just about error logs

The lessons learned from this incident are redefining the approach to monitoring. Waiting for HTTP 5xx errors, the traditional approach, is not enough. As reliability professionals rightly point out, the key is proactive alerting based on saturation metrics.

Here is what engineers should implement ‘yesterday’ to avoid similar scenarios:

Monitoring of ‘hard’ limits: If the system has a hard-coded limit (e.g. the size of a buffer or the number of entries), monitoring must alert when usage reaches 80% of that limit, not only once the limit is exceeded. This is a classic use of one of the “Four Golden Signals” (Saturation).

Correlation of deployments with anomalies: The failure was a direct result of a change. Modern observability systems need to automatically tie an application ‘panic’ to the most recent event in the CI/CD pipeline. This can reduce the MTTI (Mean Time To Identify) from hours to minutes.

Canary checks on data structure: Synthetic tests should not just check that the service ‘comes up’, but also that the data it generates (e.g. configuration files) stays within safe bounds before it is propagated globally.
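The first and third points above can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any real monitoring stack: `saturation`, `duplicate_entries` and `safe_to_propagate` are hypothetical names, and the 80% threshold is the one suggested in the text.

```rust
use std::collections::HashSet;

#[derive(Debug, PartialEq)]
pub enum Saturation {
    Ok,
    Warning,  // at or above the warning threshold, limit not yet exceeded
    Exceeded, // above the hard limit
}

/// Classifies usage against a hard limit. `warn_percent` is e.g. 80.
/// Integer arithmetic avoids floating-point rounding at the boundary.
pub fn saturation(used: usize, limit: usize, warn_percent: usize) -> Saturation {
    if used > limit {
        Saturation::Exceeded
    } else if used * 100 >= limit * warn_percent {
        Saturation::Warning
    } else {
        Saturation::Ok
    }
}

/// Canary check on the generated data: returns each entry that appears
/// more than once, in order of first repetition.
pub fn duplicate_entries<'a>(entries: &[&'a str]) -> Vec<&'a str> {
    let mut seen = HashSet::new();
    let mut dups = Vec::new();
    for &entry in entries {
        if !seen.insert(entry) && !dups.contains(&entry) {
            dups.push(entry);
        }
    }
    dups
}

/// Gate before global propagation: no duplicates, hard limit not exceeded.
/// A `Warning` does not block the rollout here; it should page someone.
pub fn safe_to_propagate(entries: &[&str], limit: usize) -> bool {
    duplicate_entries(entries).is_empty()
        && saturation(entries.len(), limit, 80) != Saturation::Exceeded
}
```

With a limit of 200, `saturation(160, 200, 80)` is classified as `Warning`, so the alert fires while there is still headroom. The duplicate check is deliberately specific to this incident's failure mode; a real canary would assert whatever invariants the generated file is supposed to hold.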

Architecture of distrust

Analysis of this case leads to another fundamental architectural conclusion: don’t trust your own configurations.

We often treat user input as potentially dangerous (SQL Injection, XSS), but we consider configuration files generated by our own systems to be safe. This is a mistake. The Input Hardening approach suggests that internal configurations should be validated with the same rigour as external data. If the system had checked the size of the file before attempting to process it, the result would have been a rejected update, not global paralysis.
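One way to express this distrust in code is to make validation the only path to a usable configuration. The sketch below assumes a hypothetical line-based file format, and `Limits`, `ConfigError` and `ValidatedConfig` are illustrative names. The idea is that code outside this module cannot obtain a `ValidatedConfig` without passing through `validate`.

```rust
#[derive(Debug, PartialEq)]
pub enum ConfigError {
    TooLarge { bytes: usize, max_bytes: usize },
    TooManyEntries { entries: usize, max_entries: usize },
    EmptyEntry { line: usize },
}

/// Hypothetical limits for an internally generated file.
pub struct Limits {
    pub max_bytes: usize,
    pub max_entries: usize,
}

/// The field is private, so other modules can only build this via `validate`.
#[derive(Debug)]
pub struct ValidatedConfig {
    entries: Vec<String>,
}

impl ValidatedConfig {
    /// Treats internal input like external input: check first, parse second.
    pub fn validate(raw: &str, limits: &Limits) -> Result<Self, ConfigError> {
        // Cheap size check before any parsing work is done.
        if raw.len() > limits.max_bytes {
            return Err(ConfigError::TooLarge {
                bytes: raw.len(),
                max_bytes: limits.max_bytes,
            });
        }
        let count = raw.lines().count();
        if count > limits.max_entries {
            return Err(ConfigError::TooManyEntries {
                entries: count,
                max_entries: limits.max_entries,
            });
        }
        let mut entries = Vec::with_capacity(count);
        for (idx, line) in raw.lines().enumerate() {
            let name = line.trim();
            if name.is_empty() {
                // Line numbers are reported 1-based.
                return Err(ConfigError::EmptyEntry { line: idx + 1 });
            }
            entries.push(name.to_string());
        }
        Ok(Self { entries })
    }

    pub fn entries(&self) -> &[String] {
        &self.entries
    }
}
```

Downstream functions would then accept `&ValidatedConfig` rather than a raw string, so forgetting the check becomes a compile-time error instead of a runtime panic.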

It is also worth refreshing your knowledge of the Bulkhead Pattern. Isolating processes and thread pools ensures that the failure of one component (in this case the bot management module) does not sink the whole ship.
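As a rough illustration of the bulkhead idea, the sketch below runs a fragile component in its own thread and converts a panic into a degraded answer. Everything in it is hypothetical: `score_request` stands in for a module with a latent bug, and `BotVerdict` is an invented result type. It also relies on Rust's default unwinding behaviour; with `panic = "abort"` the whole process would still terminate.

```rust
use std::thread;

#[derive(Debug, PartialEq)]
pub enum BotVerdict {
    Score(u8),
    Unavailable, // the module failed; the request is served without a score
}

/// Stand-in for a module with a latent bug: panics above a hard limit.
fn score_request(feature_count: usize) -> u8 {
    assert!(feature_count <= 200, "feature limit exceeded");
    (feature_count % 100) as u8
}

/// Runs the scoring in a separate thread so that a panic stays contained.
pub fn score_isolated(feature_count: usize) -> BotVerdict {
    let handle = thread::spawn(move || score_request(feature_count));
    match handle.join() {
        Ok(score) => BotVerdict::Score(score),
        // `join` returns Err when the spawned thread panicked.
        Err(_) => BotVerdict::Unavailable,
    }
}
```

Spawning a thread per call is far too expensive for a real proxy. A production bulkhead would more likely use a bounded worker pool or a separate process, but the principle is the same: the caller decides what happens when the compartment floods.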

The November 2025 incident is proof that, at scale, there are no small mistakes. There are only bugs that have not yet found their trigger. It is a signal to the IT industry to stop relying solely on functional testing and to start designing systems that are ready for the ‘impossible’. True resilience is not the absence of bugs, but the ability to survive their activation.