Model distillation has made a striking comeback in the IT community over the past few months. On the one hand, it is a response to the rising cost and energy consumption of large language models; on the other, it opens up a whole new field of regulatory and ethical disputes.

The technique, which involves transferring knowledge from huge teacher models to much smaller and cheaper student models, raises questions about intellectual property, data rights and the scope for innovation across the industry.

It is worth looking at why this issue is becoming so important right now. The development of large models, such as GPT or BERT, entails gigantic financial and infrastructural investments.

Distillation appears to be an attractive alternative – it can shrink models by as much as a factor of 100 while retaining much of their capability. For businesses, it is an opportunity to lower barriers to entry, but at the same time a source of serious regulatory concerns.

If the smaller model is in effect a copy of the large model's knowledge, can its creator use and distribute it freely? Does distillation infringe the copyright or trade secrets of the base model's owner?

This is where the problem begins. Technically, distillation does not copy code or source data; instead, the new model is ‘taught’ by the older one, learning to reproduce its outputs. In practice, however, the end result can be very similar, and the line between original and copy becomes blurred.
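To make the ‘teaching’ step concrete, here is a minimal sketch of one common formulation of the training signal: the student is penalised for diverging from the teacher's temperature-softened output distribution. The function names, the example logits and the temperature value are illustrative assumptions, not taken from any particular model or library.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The temperature**2 factor is the usual rescaling that keeps gradient
    magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's current predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits for a single input with three possible classes.
teacher = [4.0, 1.0, 0.2]
untrained_student = [1.0, 1.0, 1.0]
print(distillation_loss(teacher, untrained_student))
```

Note that nothing from the teacher's weights, code or training data enters this calculation, only its outputs, which is exactly why the legal status of the resulting student is contested.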

Imagine a situation where a commercial model – available under licence – is used as a teacher for a free open source model. The resulting student runs faster, cheaper and without licence restrictions.

Is this still legitimate inspiration or already an infringement of intellectual property?

The consequences for the market can be severe. For the major technology players, who invest hundreds of millions of dollars in model development, distillation means risking a loss of competitive advantage.

If someone is able to replicate the knowledge of their models and make it widely available, investments in original solutions lose some of their value. On the other hand, smaller companies finally gain access to advanced technology without having to maintain server farms and large research teams.

In this sense, distillation democratises AI, but at the expense of legal certainty. Unclear regulations can lead to lawsuits, and lawsuits can slow down innovation.

Legal aspects are only one side of the coin. Ethical issues are equally important. Student models inherit not only knowledge, but also the mistakes and biases of their teachers. If the base model is biased against certain groups in society, that bias will be passed on, but now outside the control and responsibility of the original creator.

Added to this is the problem of transparency: users do not always know that they are using a system derived from another, possibly commercial, model. Finally, there is the risk of transferring sensitive data – if personal data was used to train the teacher model, traces of it may also surface in the student model.

What can be done about it? Experts point out that international standards are needed to protect intellectual property in AI. Distillation requires clear licensing rules – who can use the model as a teacher and under what conditions.

One proposed solution is to register rights on a blockchain, which would make it possible to track the lineage of each model and its links to others. Another approach is compliance audits and tagging mechanisms that would indicate which models a given model was derived from.

Such solutions could become part of regulatory packages such as the EU Artificial Intelligence Act.
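As a rough illustration of what such a tagging mechanism might look like, the sketch below gives each model a small tag that records its licence and the fingerprint of its teacher, so that lineage can be traced back through a registry. Everything here (the `ModelTag` class, its fields, the `lineage` helper and the model names) is a hypothetical design for illustration, not an existing standard or library.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from typing import Dict, List, Optional

@dataclass(frozen=True)
class ModelTag:
    """Hypothetical provenance tag attached to a published model."""
    name: str
    licence: str
    teacher_fingerprint: Optional[str] = None  # None: not distilled from another model

    def fingerprint(self) -> str:
        # Hash a canonical JSON form so any change to the tag changes the fingerprint.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

def lineage(tag: ModelTag, registry: Dict[str, ModelTag]) -> List[str]:
    """Follow teacher fingerprints back through the registry, newest model first."""
    chain = [tag.name]
    parent = tag.teacher_fingerprint
    while parent is not None:
        ancestor = registry[parent]
        chain.append(ancestor.name)
        parent = ancestor.teacher_fingerprint
    return chain

# Hypothetical models: a licensed teacher and an open source student distilled from it.
teacher = ModelTag("teacher-large", "commercial")
student = ModelTag("student-small", "open-source", teacher.fingerprint())
registry = {t.fingerprint(): t for t in (teacher, student)}
print(lineage(student, registry))
```

Because the student's tag embeds the teacher's fingerprint, the link between the two cannot be altered without changing the student's own fingerprint. A blockchain-based register would apply the same idea to a shared, append-only ledger.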

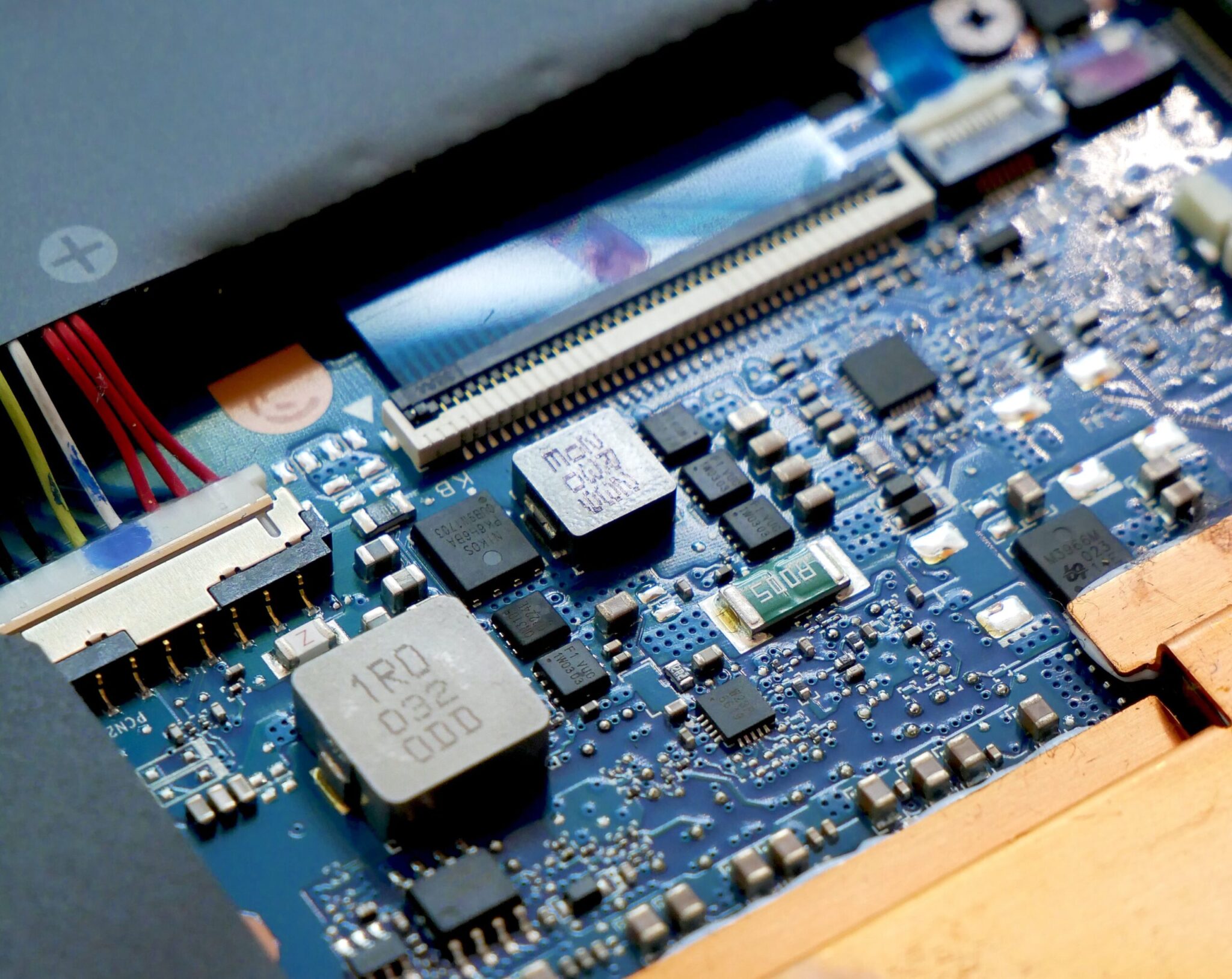

However, the outlook is still uncertain. On the one hand, distillation has the potential to become the cornerstone of mass AI adoption – enabling deployments on mobile devices, in IoT systems and in other resource-constrained environments.

On the other hand, there are still no clear rules defining the limits of legality. Regional differences further complicate the picture: the United States puts more emphasis on the free market and the protection of innovation, Europe on regulation and data security, and China on the control of strategic technologies. Whichever of these approaches proves dominant will determine the global shape of the industry.

In the background, another question remains: whether an excessive focus on distillation will leave less room for true innovation. If the market focuses mainly on copying and shrinking existing solutions, the impetus for completely new architectures and breakthrough concepts may be missing.

The balance between efficiency and creativity will be one of the most important challenges in the coming years.

Model distillation is thus a technique with enormous potential, but also one that sits on the boundary of law and ethics. It gives smaller companies a tool to compete with the giants, but in doing so it undermines the existing principles of intellectual property protection.

It can accelerate AI deployments on a massive scale, but if not clearly regulated, it risks chaos and legal uncertainty.

The IT industry today faces a choice: treat distillation as a natural step in the evolution of artificial intelligence and find a regulatory framework for it, or risk it becoming a field for abuse and conflict.

The outcome of this debate will determine whether distillation comes to be seen as a technology that drives growth or as a destabilising factor in the AI market.