Growing demand for computing power for generative artificial intelligence is forcing a fundamental change in data centre design. Traditional approaches are proving insufficient as systems scale to gigawatts of power. In response, Vertiv, a company specialising in critical infrastructure, is strengthening its collaboration with NVIDIA. The company has introduced gigawatt-scale reference architectures developed for the NVIDIA Omniverse DSX Blueprint.

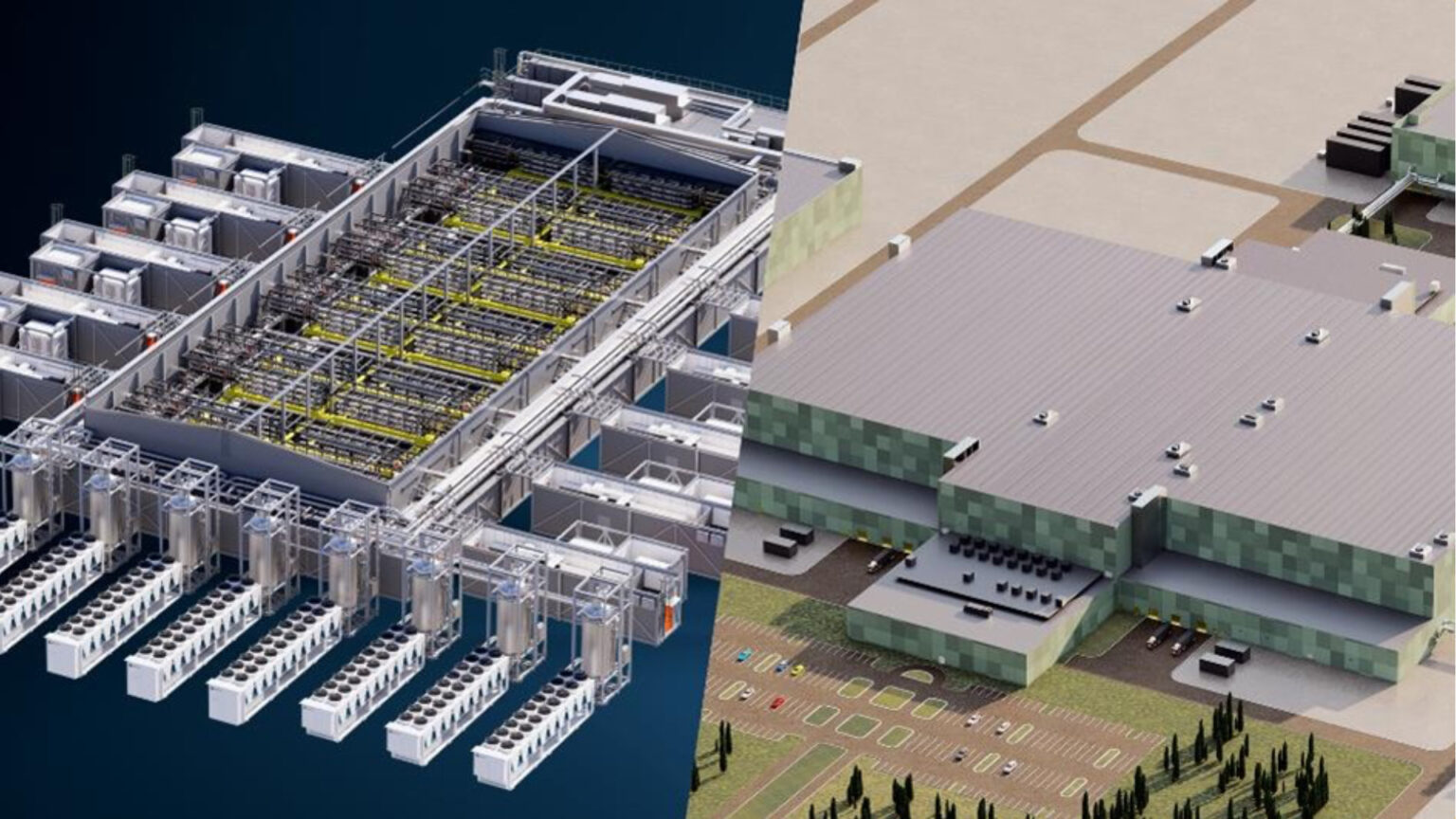

The aim is to drastically reduce the time it takes to get large-scale AI systems, such as those based on NVIDIA’s Vera Rubin architecture, up and running, a measure the industry refers to as ‘time to first token’. Vertiv is betting on a combination of standardisation and flexibility. The new architectures move away from a one-size-fits-all model and instead offer customers three validated deployment paths: a traditional on-premises build, a hybrid model and fully prefabricated AI factories.

The last of these options, based on the Vertiv OneCore platform, is aimed squarely at time-to-market pressure. The platform treats the entire facility as a single, jointly designed and integrated system covering compute, power and cooling. Vertiv estimates that this approach can reduce project lead times by up to 50% compared with traditional construction methods, while also optimising space utilisation.

Deeper integration with digital twins is another element intended to accelerate the process. Vertiv has made its SimReady 3D assets available in NVIDIA Omniverse libraries. This allows engineers to simulate and optimise the entire infrastructure, from grid-to-chip power topologies to advanced liquid cooling systems, before physical construction begins. It reduces risk and allows future upgrades to be tested in advance.

The architectures integrate Vertiv’s liquid cooling technologies, including an end-to-end heat recovery chain, which are designed to handle the extreme thermal requirements of accelerated computing systems.

The partnership is part of NVIDIA’s strategy to build next-generation ‘AI factories’. Dion Harris of NVIDIA spoke of the need for a “robust ecosystem of partners”, while Vertiv’s Scott Armul added that “the AI-driven industrial revolution requires a complete redesign of infrastructure”. Both companies are signalling that the AI era requires not only more powerful chips but, more importantly, a fundamentally new approach to the infrastructure that powers them.