At North Carolina State University, robotic arms precisely mix chemicals while streams of data flow through systems in real time. This ‘self-driving laboratory’, an AI-powered platform, discovers new materials for clean energy and electronics not in years but in days.

Collecting data 10 times faster than traditional methods, it observes chemical reactions like a full-length film rather than a single snapshot. This is not science fiction; it is the new reality of scientific discovery.

This incredible leap is being driven by a new kind of computing engine: specialised AI accelerator chips. These are the ‘silicon brains’ of the revolution. Moore’s law, the old paradigm of doubling computing power in general-purpose systems, has given way to a new law of exponential progress, driven by massive parallel processing.

The crux of the story, however, is more complex. While AI algorithms are the software of a new scientific era, the physical hardware – the AI chips – has become the fundamental enabler of progress and, paradoxically, also its biggest bottleneck.

The ability to discover a new life-saving drug or design a more efficient solar cell is today inextricably linked to a hyper-competitive, multi-billion dollar corporate arms race and a fragile geopolitical landscape in which access to these chips is a tool of global power.

Anatomy of a boom: who is building silicon brains?

The boom in generative artificial intelligence has created an insatiable demand for computing power. That demand comes not just from chatbots but from the foundation models that underpin a new wave of scientific research, and it has transformed a niche market into a global battlefield for dominance.

Reigning champion: NVIDIA

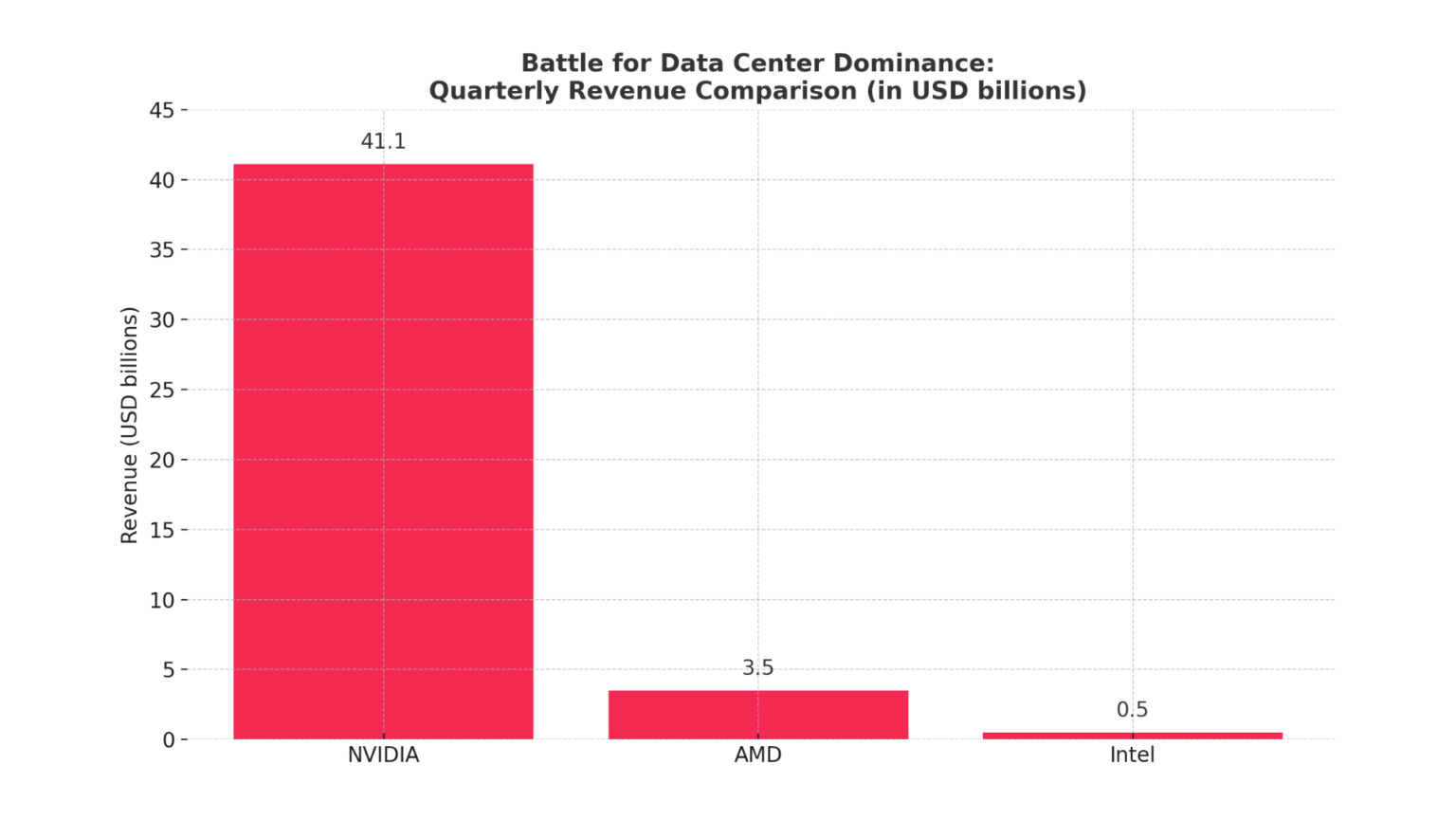

NVIDIA has established itself as a key architect of the AI revolution, as evidenced by its stunning financial results. The data centre division, the heart of the company’s AI business, reported revenues of $41.1bn in a single quarter, up 56% year-on-year.

This dominance is based on successive generations of powerful architectures such as Hopper and now Blackwell, which serve as core hardware for technology giants such as Microsoft, Meta and OpenAI.

An energetic contender: AMD

AMD is positioning itself not as a distant number two, but as a serious and fast-growing competitor. The company reported record data centre revenue of US$3.5bn in Q3 2024, a massive 122% year-on-year increase, driven by strong adoption of its Instinct series GPU accelerators.

Significantly, major cloud service providers and companies such as Microsoft and Meta are actively deploying MI300X accelerators from AMD, signalling a desire to have a viable alternative to NVIDIA. The company forecasts that its data centre GPU revenue will exceed US$5bn in 2024.
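The growth percentages quoted for NVIDIA and AMD are easier to compare once they are converted back into absolute figures. The snippet below is a rough sketch that uses only the numbers cited in this article; the helper name `prior_year_revenue` is illustrative, and the two quarters do not cover the same calendar period, so the comparison is indicative rather than exact.

```python
def prior_year_revenue(current_bn: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago figure implied by a current value and its YoY growth."""
    return current_bn / (1.0 + yoy_growth_pct / 100.0)


# Quarterly data centre revenue as quoted in the article (US$ billions).
# Assumption: the figures are treated as comparable although the quarters differ.
nvidia_now, nvidia_growth_pct = 41.1, 56.0
amd_now, amd_growth_pct = 3.5, 122.0

nvidia_prior = prior_year_revenue(nvidia_now, nvidia_growth_pct)
amd_prior = prior_year_revenue(amd_now, amd_growth_pct)

# Absolute revenue added over the year, which percentages alone tend to hide.
nvidia_added = nvidia_now - nvidia_prior
amd_added = amd_now - amd_prior

print(f"NVIDIA: about ${nvidia_prior:.1f}bn a year earlier, about ${nvidia_added:.1f}bn added")
print(f"AMD:    about ${amd_prior:.1f}bn a year earlier, about ${amd_added:.1f}bn added")
```

On these figures, AMD's percentage growth is more than double NVIDIA's, yet the absolute revenue NVIDIA added in a year is several times AMD's entire quarterly data centre revenue.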

The gambit of the historical giant: Intel

Intel’s situation presents a strategic challenge. Although the company claims that its Gaudi 3 accelerators offer a better price/performance ratio than NVIDIA’s H100, it is struggling to gain market share.

Intel missed its $500m revenue target for Gaudi in 2024, citing slower-than-expected adoption due to issues with transitioning between product generations and, crucially, challenges with ‘ease of use of the software’.

Analysis of this data reveals deeper trends. Firstly, the AI hardware market is not just a race for components, but a war of platforms. Intel’s difficulties with software point to the real battlefield: the ecosystem. NVIDIA’s CUDA platform has more than a decade’s head start, creating a deep ‘moat’ of developer tools, libraries and expertise.

Competitors are not just selling silicon; they need to convince the entire scientific and developer community to learn a new programming model.

Secondly, the AI boom is leading to vertical integration of the data centre. Not only does NVIDIA dominate the GPU market, but following its acquisition of networking company Mellanox in 2020, it has also become the leader in Ethernet switches, recording sales growth of 7.5x year-on-year.

NVIDIA is no longer just selling chips; it is selling a complete, optimised ‘AI factory’ design, creating an even stronger lock-in effect.

From lab to reality: scientific breakthroughs powered by silicon

This unprecedented computing power is fueling a revolution in the way we do research, leading to breakthroughs that seemed impossible just a few years ago.

The medicine of tomorrow

The traditional drug discovery process, which takes 10 to 15 years, is being dramatically shortened. DeepMind CEO Demis Hassabis predicts that AI will reduce this time to “a matter of months”.

Isomorphic Labs, an Alphabet company spun out of DeepMind, is using AI to model complex biological systems and predict drug-protein interactions. Researchers at Virginia Tech have developed an AI tool called ProRNA3D-single that creates 3D models of protein-RNA interactions – key to understanding viruses and neurological diseases such as Alzheimer’s.

Moreover, a new tool from Harvard, PDGrapher, goes beyond the ‘one target, one drug’ model. It uses a graph neural network to map the entire complex system of a diseased cell and predicts combinations of therapies that can restore it to health.

High-resolution climate

In the past, accurate climate modelling required a supercomputer. Today, AI models such as NeuralGCM from Google can run on a single laptop. This model, trained on decades of weather data, helped predict the arrival of the monsoon in India months in advance, providing key forecasts to 38 million farmers.

A new AI model from the University of Washington is able to simulate 1,000 years of Earth’s climate in just one day on a single processor – a task that would take a supercomputer 90 days.
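The scale of that claim is easier to see as throughput. The sketch below uses only the figures quoted above (1,000 simulated years, one day versus 90 days of wall-clock time); the function name is illustrative, and the comparison deliberately ignores the difference in hardware and the cost of training the model in the first place.

```python
def simulated_years_per_day(simulated_years: float, wall_clock_days: float) -> float:
    """Throughput: simulated climate years produced per day of wall-clock time."""
    return simulated_years / wall_clock_days


# Figures as quoted in the article.
ai_rate = simulated_years_per_day(1000, 1)    # AI model on a single processor
hpc_rate = simulated_years_per_day(1000, 90)  # conventional supercomputer run

# Wall-clock speedup only; it says nothing about energy use or hardware cost.
speedup = ai_rate / hpc_rate

print(f"AI model:      {ai_rate:.0f} simulated years per day")
print(f"Supercomputer: {hpc_rate:.1f} simulated years per day")
print(f"Speedup:       about {speedup:.0f}x")
```

Put this way, the conventional run produces roughly 11 simulated years per day, so the quoted figures amount to a speedup of about 90 times on far more modest hardware.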

Companies like Google DeepMind (WeatherNext), NVIDIA (Earth-2) and universities like Cambridge (Aardvark Weather) are building fully AI-driven systems that are faster, more efficient and often more accurate than traditional models.

Alchemy of the 21st century

As mentioned at the outset, AI is creating autonomous labs that accelerate materials discovery by a factor of ten or more. The paradigm is shifting from searching existing materials to generating entirely new ones.

AI models such as MatterGen from Microsoft can design new inorganic materials with desired properties from scratch. This ‘inverse design’ capability, where scientists specify a need and AI proposes a material that meets it, has long been the holy grail of materials science.

These examples illustrate a fundamental change in the scientific method itself. The computer has ceased to be merely a tool for analysis; it has become an active participant in the generation of hypotheses. The role of the scientist is evolving into a curator of powerful generative systems.

This accelerates the discovery cycle exponentially and allows scientists to explore a much larger ‘problem space’ than was ever possible for humans.

Geopolitical storm and a new division of the world

As the importance of these silicon brains grows, they are becoming the most valuable strategic resource of the 21st century – the new oil, crucial for economic competitiveness and scientific leadership.

US strategy: “small yard, high fence”

The US has implemented a ‘small yard, high fence’ strategy, introducing export controls aimed at slowing China’s ability to develop advanced AI. These restrictions apply not only to the chips themselves (such as NVIDIA’s H100), but also to the equipment required to manufacture them (from companies such as the Dutch firm ASML).

This hit the Chinese semiconductor industry in the short term, causing equipment shortages and ‘crippling’ its production capacity.

China’s determined response

China’s response has been multi-pronged: massive investment in its domestic semiconductor industry and the use of its own economic leverage by restricting exports of key rare earth elements. Huawei is the clearest case study.

Despite being crippled by sanctions, the company has developed its own line of Ascend AI chips (910B/C/D), which are now seen as a viable alternative to NVIDIA products in China.

In response, the US government has toughened its stance, declaring that the use of these chips anywhere in the world violates US export controls, escalating the technological divide.

A study by Oxford University reveals a harsh reality: advanced GPUs are heavily concentrated in just a few countries, mainly in the US and China. The US leads the way in access to state-of-the-art chips, while much of the world is in ‘computing deserts’.

This situation leads to unintended consequences. US export controls, designed to slow China down, have become an ‘inadvertent accelerator of innovation’ for China, forcing Beijing to build a completely independent technology stack.

A decade from now, the world may have two completely separate, incompatible AI stacks, fundamentally dividing global research.

The cloud as the great equaliser?

There is a powerful counter-argument: cloud computing democratises access to elite AI. Platforms such as Amazon Web Services (AWS), Microsoft Azure and Google Cloud offer AI-as-a-Service (AIaaS), allowing a university or startup to rent the same powerful GPUs that OpenAI uses.

The cloud giants offer rich ecosystems. AWS provides services such as SageMaker for building models and Bedrock for access to leading foundation models. Google Cloud promotes democratisation with tools such as Vertex AI, designed for minimal complexity.

Microsoft Azure is tightly integrating AI into its ecosystem through Azure AI Foundry, offering access to more than 1,700 models and running dedicated ‘AI for Science’ research labs.

However, the promise of access must be set against the harsh reality of cost. Training a state-of-the-art model is prohibitively expensive, with estimates as high as USD 78 million for GPT-4 and USD 191 million for Gemini Ultra. This leads to a ‘two-tier democracy’ in AI research.

On the one hand, any researcher with a grant can access world-class AI tools. This is the democratisation of application. On the other hand, the ability to train a new large-scale foundation model from scratch remains the exclusive domain of a handful of actors: the cloud providers themselves and their key partners.

This is the centralisation of creation. The cloud ‘democratises’ AI in the same way that a public library democratises access to books. Anyone can read them, but only a few have the resources to write and publish them.

A future written in silicon

The breathtaking pace of scientific discovery in medicine, climatology and materials science is a direct consequence of the massive industrial and geopolitical mobilisation around a single technology: the AI accelerator.

Progress has become fragile and deeply interdependent. Scientific breakthrough is no longer just a function of a brilliant mind. It now also depends on the quarterly financial reports of NVIDIA and AMD, the trade policies enacted in Washington and Beijing, the stability of the supply chain passing through Taiwan and the pricing models of AWS, Google and Microsoft.

We have entered an era where the future is literally written in silicon. The great challenges of our time – curing disease, fighting climate change, creating a sustainable future – will be solved with these new tools.

But who will be able to wield them, and for what purpose, remains the most important and unresolved question of the 21st century. The next great scientific revolution will be televised live, but the rights to broadcast it are currently being negotiated in the boardrooms of corporations and the corridors of global power.