After two years of fascination with generative AI, the technology industry is entering the ‘prove it’ stage. Enthusiasm is colliding with hard reality, and statistics showing low levels of AI adoption in many economies are bringing us back down to earth.

This year’s Dell Technologies Forum in Warsaw was a good example of this. As Dariusz Piotrowski aptly put it, today’s key principle is: ‘AI follows the data, not the other way around’. Algorithms are no longer the bottleneck. The real challenge for business is access to clean, secure and well-structured data. The discussion has decisively moved from the lab to the operational back office.

AI follows the data

We have long believed that the key to the revolution is a better algorithm. That myth is now collapsing. Internal case studies from major technology companies show that the hard part of implementing an internal AI tool is often not the model itself, but months of painstaking work on… organising distributed data and making it accessible.

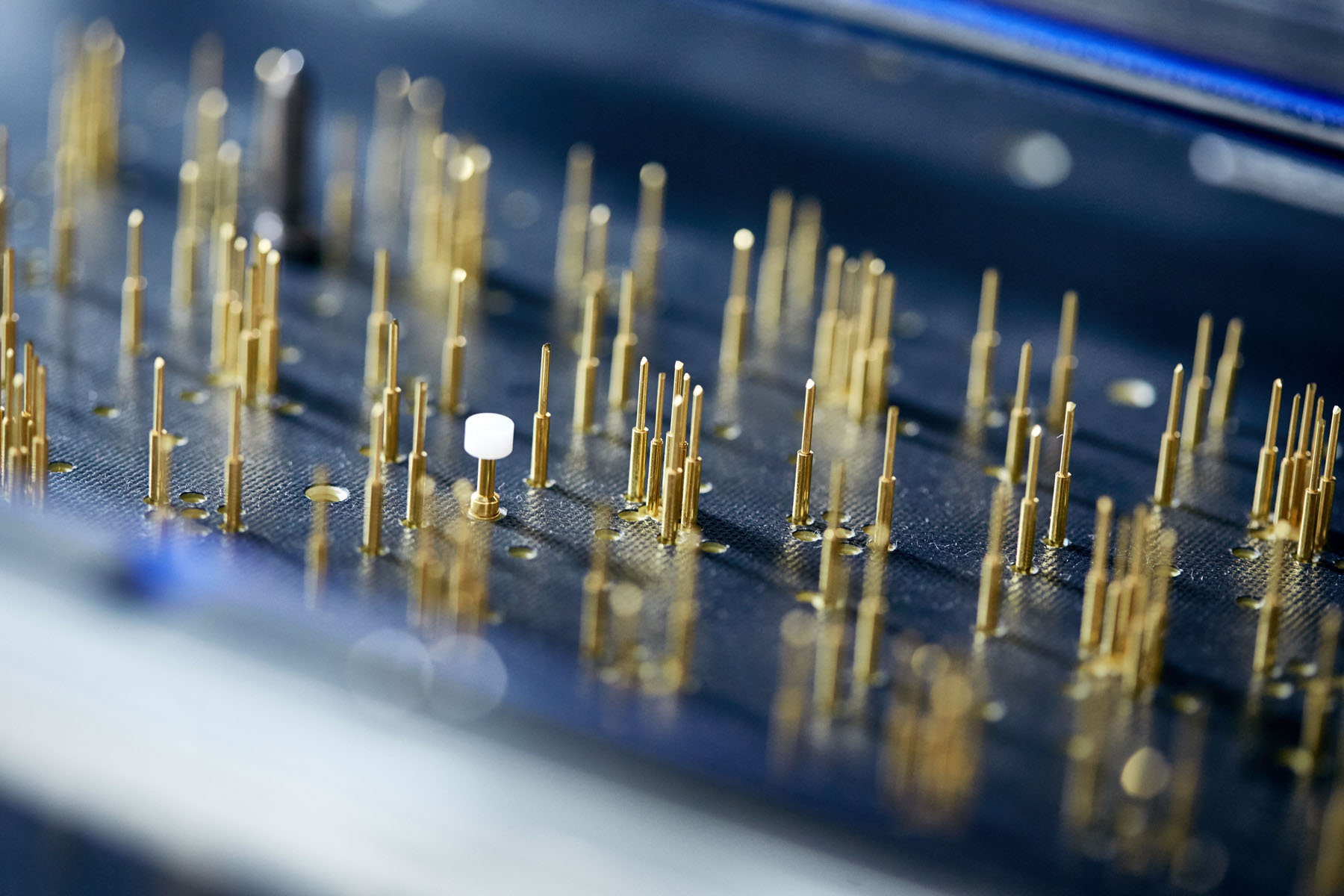

This has an immediate consequence: computing power must move to where the data originates. Instead of sending terabytes of information to a central cloud, AI must start operating ‘at the edge’ (Edge AI).

The most visible manifestation of this trend is the birth of the AI PC era. With dedicated neural processing units (NPUs), PCs are expected to handle AI tasks locally. This is not a marketing gimmick but a fundamental change in architecture. It is all about security and privacy: key data no longer needs to leave the desk to be processed.

Of course, the puzzle will not come together without solid foundations. Because data is so critical, the cybersecurity landscape is changing. The number one target of attacks is no longer production systems, but backups. This is why ‘digital bunkers’ (isolated recovery vaults), which guarantee access to ‘uncontaminated’ data, are becoming the absolute foundation of any serious AI strategy.

Pragmatism versus “Agent Washing”

In this red-hot market, how do you tell real value from marketing illusion? After the wave of fascination with GenAI, ‘AI agents’ are becoming the industry’s new holy grail. But we must beware of “Agent Washing”: repackaging old algorithms in a shiny new box with a trendy label.

Businesses are beginning to understand that a chaotic ‘bottom-up’ approach leads nowhere. As Said Akar of Dell Technologies frankly admitted, the company initially collected ‘1,800 use cases’ for AI, a list that could easily have led to paralysis. The strategy was therefore changed to a strict ‘top-down’ approach: identify a real business problem, define a measurable return on investment (ROI), and only then select the tools.

This leads directly to a global trend: a shift away from the pursuit of one giant, all-purpose model (the road to AGI, artificial general intelligence) towards ‘Narrow AI’, systems specialised for specific tasks. The trend is reinforced by the growing need for digital sovereignty. States and key sectors (such as finance or public administration) cannot afford to depend on a handful of global providers. Hence the rise in investment in local models that offer greater control.

Hype versus hallucination

When the dust settles, it turns out that the great technological race is no longer just about making models know more. It is about making them… make things up less often. Hallucinations remain the biggest technical and business problem.

‘Human-in-the-loop’, with a person at the centre of the process, is becoming the dominant and only viable business model. In regulated industries, no one in their right mind will allow a machine to ‘pull the lever’ on its own. As mBank’s Agnieszka Słomka-Gołębiowska aptly pointed out, financial institutions are in the ‘business of trust’, and the biggest risk of AI is ‘bias’, which cannot be fully controlled within the model itself.

Artificial intelligence is set to become a powerful collaborator that takes over the ‘thankless tasks’, but the final, strategic decision rests with humans. The real revolution is pragmatic, happens ‘at the edge’, and is meant to help people in their work, not take that work away.