Today’s digital economy rests on an invisible but fundamental pillar: public key cryptography. Algorithms such as RSA and ECC have become synonymous with digital trust, but this foundation faces an existential threat. The advent of quantum computers capable of breaking current encryption standards is not the next evolutionary step in cyber security; it is a revolution that is forcing technology leaders to fundamentally change their thinking about data protection.

The quantum threat is not a distant, theoretical possibility. It materialises today through a strategy known as ‘Harvest Now, Decrypt Later’ (HNDL). Adversaries, including state actors, are actively capturing and storing vast amounts of encrypted data, patiently waiting for the moment when a cryptographically relevant quantum computer (CRQC) becomes operational. At that point, data we consider secure today will be decrypted retrospectively. This fundamentally changes the risk model: the problem shifts from a purely operational concern to a strategic one, tied to protecting the company’s long-term value. Any information whose required confidentiality period extends beyond the anticipated arrival of a CRQC is already at risk.
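The exposure condition described above is often formalised as Mosca’s inequality: data is at risk when the time it must stay confidential plus the time needed to migrate exceeds the time until a CRQC exists. A minimal sketch of that check follows; the function name and the example figures are illustrative assumptions, not estimates from this article.

```python
def is_exposed_to_hndl(confidentiality_years: float,
                       migration_years: float,
                       years_until_crqc: float) -> bool:
    """Mosca's inequality: exposed if x + y > z.

    x = how long the data must remain confidential
    y = how long the migration to PQC will take
    z = assumed time until a CRQC is operational (a guess, not a known value)
    """
    return confidentiality_years + migration_years > years_until_crqc


# Hypothetical inputs, chosen only to show both outcomes.
print(is_exposed_to_hndl(10, 3, 12))  # long-lived records: exposed
print(is_exposed_to_hndl(2, 1, 12))   # short-lived session data: not exposed
```

Because the third input is inherently uncertain, it may be more useful to run the check across a range of CRQC arrival assumptions than to rely on a single figure.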

The arguments for postponing migration to post-quantum cryptography (PQC) have run out of steam. In August 2024, the US National Institute of Standards and Technology (NIST) published the first finalised PQC standards: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA) and FIPS 205 (SLH-DSA). This event sends a key signal to the global market: the research phase is over and the standards are awaiting implementation.

End of the era of “one right encryption”

For the past decades, public key cryptography decisions have been relatively straightforward, mostly boiling down to a choice between RSA and ECC. The post-quantum era puts a definitive end to this paradigm. We are entering a world where there is not and will not be a one-size-fits-all PQC algorithm. Choosing the right solution becomes a conscious strategic decision that must be precisely tailored to the specific use case.

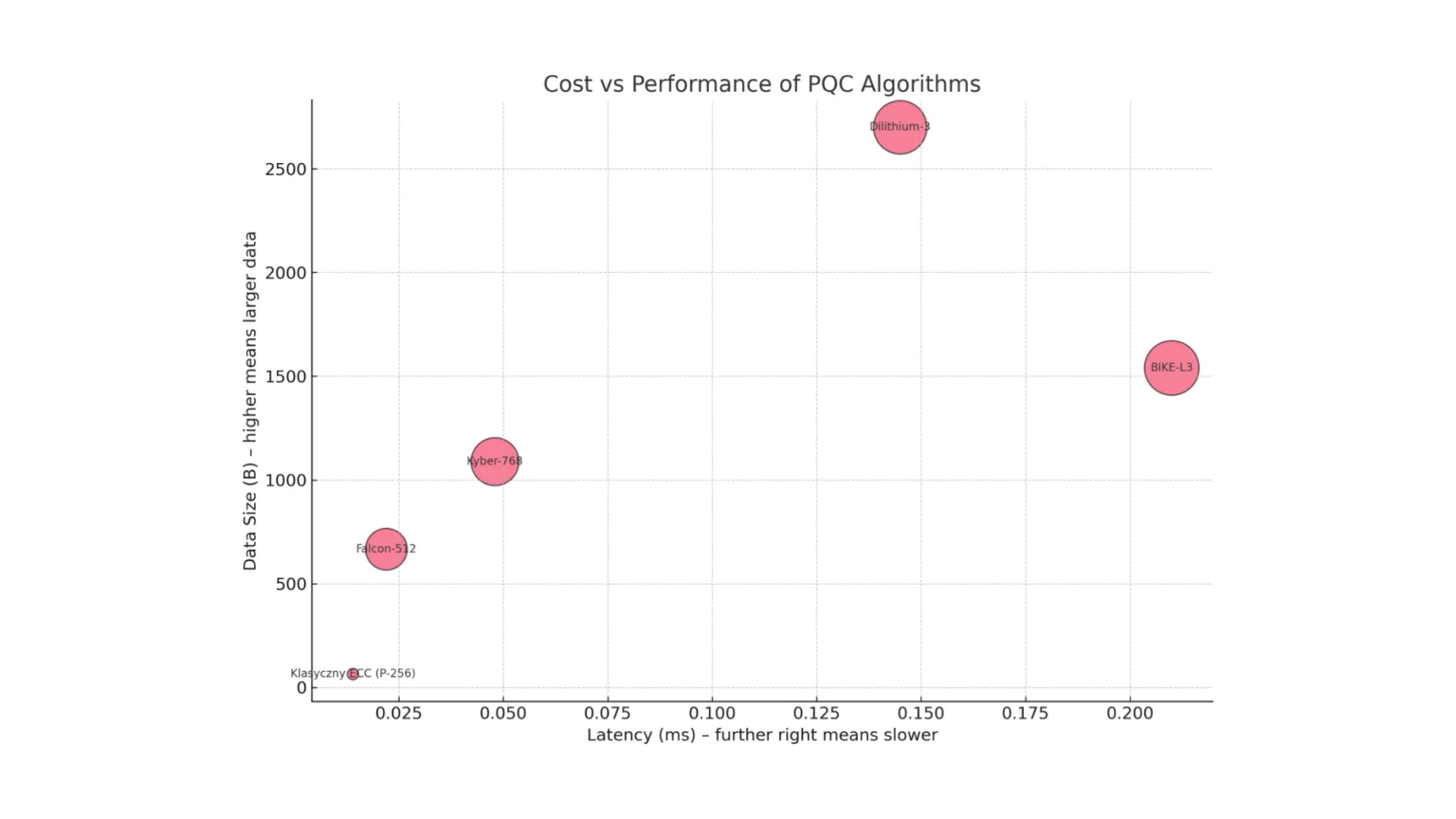

The world of PQC is inherently heterogeneous. NIST deliberately standardises algorithms from different mathematical families because each offers a unique set of trade-offs between computational efficiency, data size, resource usage and security level. Algorithm selection ceases to be solely the domain of cryptographers and becomes an architectural and product decision. Differences in the characteristics of individual algorithms lead to drastically different consequences depending on the deployment scenario.

For high-performance TLS servers supporting, for example, an e-commerce front-end, minimal latency is a priority. Benchmark data clearly shows that CRYSTALS-Kyber (ML-KEM) leads the way, offering negligible performance overhead. In contrast, in VPNs where maximum throughput is crucial, algorithms with very large keys, such as BIKE, can become an issue, causing massive IP packet fragmentation and degrading network performance. In the world of IoT devices, where resources are extremely limited, algorithms with high memory requirements can be completely impractical, directly impacting component cost.

The data speaks for itself

Abstract discussions become real when hard data is analysed. Translating milliseconds of latency and kilobytes of data into concrete business implications is key to making informed decisions. Analysis based on comprehensive benchmarks shows that the choice of PQC algorithm is not only a question of security, but also of real operational costs.

The undisputed performance leader is CRYSTALS-Kyber, which shows negligible computational overhead in server tests. Its public keys and ciphertexts are compact, occupying just over 2 KB in total, and peak RAM consumption is minimal, making it an ideal candidate for a wide range of applications. At the other extreme is BIKE, a code-based algorithm. Despite its advantages, its implementation comes at a tangible cost: the total data exchanged during connection establishment exceeds 10 KB, which is more than four times that of Kyber. This size not only increases the cost of data transfer, but above all risks fragmenting network packets.
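The fragmentation risk can be made concrete with simple arithmetic. The sketch below estimates how many MTU-sized packets a key-exchange payload spans. The 1500-byte MTU, the 40-byte header allowance and the two payload sizes are illustrative assumptions that only approximate the "just over 2 KB" and "more than 10 KB" figures above; they are not benchmark results.

```python
import math


def packets_needed(payload_bytes: int, mtu: int = 1500, header_bytes: int = 40) -> int:
    """Number of packets required to carry a payload.

    Assumes a fixed per-packet header allowance (here IPv4 + TCP without
    options); real overhead depends on the protocol stack and any tunnelling.
    """
    usable = mtu - header_bytes
    return math.ceil(payload_bytes / usable)


# Illustrative payload sizes (assumptions, not measured values).
compact_kem_bytes = 2_200    # roughly "just over 2 KB"
large_kem_bytes = 10_300     # roughly "more than 10 KB"

print(packets_needed(compact_kem_bytes))  # 2
print(packets_needed(large_kem_bytes))    # 8
```

Under these assumptions the larger exchange needs four times as many packets, and each additional packet is another opportunity for loss and retransmission, particularly inside a VPN tunnel where the usable MTU is smaller still.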

Even more striking is the example of the Falcon signature algorithm. It offers extremely small signatures, which is a huge advantage in IoT applications where bandwidth is at a premium. However, its peak memory consumption is more than 26 times that of the competing Dilithium (ML-DSA). For an engineer designing a medical device, this may mean choosing a more expensive microcontroller, which increases the unit cost of the overall product.

Hidden risks and recommendations for CISOs

Achieving compliance with PQC standards is just the first step. The biggest risks lie not in the theory of the algorithms, but in their practical implementation. Mathematical robustness is worthless if the physical implementation on the processor ‘leaks’ information about the secret key through side-channel attacks. Research has already shown successful attacks on Kyber and Dilithium implementations using analysis of power consumption or electromagnetic emissions.

A key implication for CISOs is the hidden cost of security. Securing an implementation requires specialised countermeasures, such as masking, which introduce a significant performance overhead. NIST representatives have admitted that a well-secured implementation of Kyber can be up to twice as slow as its baseline version. This means that infrastructure budgets based on benchmarks of unsecured implementations are fundamentally flawed.

Another area of risk is the supply chain. The security of a PQC system is only as strong as its weakest link, and these are often outside the company’s control – in open-source libraries or cloud providers. The CISO needs to start asking vendors tough questions about their roadmap for implementing NIST standards, support for hybrid modes and documented resilience to physical attacks.

The solution to these challenges is crypto-agility: the ability of an architecture to replace cryptographic algorithms quickly and easily, without fundamental changes to the infrastructure. In the dynamic world of PQC, where new standards are already emerging, this approach is the way to avoid costly and risky migrations in the future. Investing in a crypto-agile architecture now, as part of the first PQC project, is a strategic decision that will drastically reduce the total cost of ownership of security over the coming decade.
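One common way to achieve crypto-agility is to have application code depend on an abstract key-encapsulation interface and select the concrete algorithm by name from configuration. The sketch below illustrates that pattern only. The `Kem` interface, the registry functions and `ToyKem` are all hypothetical names, and `ToyKem` is a placeholder with no security value; in practice it would be replaced by a vetted ML-KEM implementation.

```python
import hashlib
import secrets
from typing import Callable, Dict, Protocol, Tuple


class Kem(Protocol):
    """Hypothetical interface that application code depends on."""

    def generate_keypair(self) -> Tuple[bytes, bytes]: ...
    def encapsulate(self, public_key: bytes) -> Tuple[bytes, bytes]: ...
    def decapsulate(self, secret_key: bytes, ciphertext: bytes) -> bytes: ...


class ToyKem:
    """Placeholder with NO security value; it only exercises the interface."""

    def __init__(self, label: str) -> None:
        self.label = label.encode()

    def generate_keypair(self) -> Tuple[bytes, bytes]:
        secret_key = secrets.token_bytes(32)
        public_key = hashlib.sha256(self.label + secret_key).digest()
        return public_key, secret_key

    def encapsulate(self, public_key: bytes) -> Tuple[bytes, bytes]:
        ciphertext = secrets.token_bytes(32)
        shared_secret = hashlib.sha256(public_key + ciphertext).digest()
        return ciphertext, shared_secret

    def decapsulate(self, secret_key: bytes, ciphertext: bytes) -> bytes:
        public_key = hashlib.sha256(self.label + secret_key).digest()
        return hashlib.sha256(public_key + ciphertext).digest()


# Registry mapping configuration names to algorithm factories.
_REGISTRY: Dict[str, Callable[[], Kem]] = {}


def register_kem(name: str, factory: Callable[[], Kem]) -> None:
    _REGISTRY[name] = factory


def get_kem(name: str) -> Kem:
    try:
        return _REGISTRY[name]()
    except KeyError:
        raise ValueError(f"unknown KEM: {name!r}") from None


register_kem("toy-a", lambda: ToyKem("a"))
register_kem("toy-b", lambda: ToyKem("b"))


def establish_shared_secret(config: Dict[str, str]) -> Tuple[bytes, bytes]:
    """Application code: never names a concrete algorithm."""
    kem = get_kem(config["kem"])
    public_key, secret_key = kem.generate_keypair()
    ciphertext, sender_secret = kem.encapsulate(public_key)
    receiver_secret = kem.decapsulate(secret_key, ciphertext)
    return sender_secret, receiver_secret


# Swapping algorithms is a configuration change, not a code change.
sender, receiver = establish_shared_secret({"kem": "toy-a"})
print(sender == receiver)
```

The design choice that matters here is that `establish_shared_secret` has no knowledge of which algorithm it runs. Adding a new standard then means registering one more factory and changing a configuration value, and a hybrid mode could be registered the same way as a composite implementation of the same interface.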

Migration to PQC is not a one-off project, but a strategic transformation programme. It should proceed in a methodical and phased manner. The first, fundamental phase is in-depth preparation and inventory. The crucial step here is creating a detailed cryptographic inventory that maps all systems using vulnerable cryptography. Then, based on a risk analysis, the migration should be prioritised, focusing on assets with the longest required confidentiality lifecycle. The second phase is to build technical capacity, including designing systems for the aforementioned crypto-agility and launching controlled pilot projects in hybrid modes. This approach allows the collection of real data on the impact of PQC on the company’s specific environment. The final phase is the actual phased migration of production systems and the establishment of continuous monitoring processes.
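The inventory and prioritisation step of the first phase can be sketched in a few lines. The example below filters a cryptographic inventory down to quantum-vulnerable public-key algorithms and orders the result by required confidentiality lifetime. The record fields, the system names and the lifetimes are hypothetical; a real inventory would carry far more detail (owners, protocols, key sizes, vendors).

```python
from dataclasses import dataclass
from typing import List

# Public-key algorithm families breakable by a CRQC (RSA and the ECC/DH family).
QUANTUM_VULNERABLE = {"RSA", "ECDH", "ECDSA", "DH"}


@dataclass
class CryptoAsset:
    system: str                  # hypothetical system name
    algorithm: str               # algorithm protecting the data
    confidentiality_years: int   # how long the data must stay secret


def migration_order(inventory: List[CryptoAsset]) -> List[CryptoAsset]:
    """Vulnerable assets first, longest confidentiality lifetime at the top."""
    vulnerable = [a for a in inventory if a.algorithm in QUANTUM_VULNERABLE]
    return sorted(vulnerable, key=lambda a: a.confidentiality_years, reverse=True)


# Illustrative inventory; every entry is made up for the example.
inventory = [
    CryptoAsset("web-sessions", "ECDH", 1),
    CryptoAsset("patient-records-archive", "RSA", 25),
    CryptoAsset("disk-encryption", "AES-256", 10),
    CryptoAsset("contract-vault", "ECDH", 15),
]

for asset in migration_order(inventory):
    print(asset.system, asset.confidentiality_years)
```

Sorting by confidentiality lifetime alone is a deliberate simplification; a fuller risk analysis would also weigh data sensitivity, exposure to interception and migration effort.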

The move to post-quantum cryptography is an evolution in technology risk management. The key long-term investment is not in implementing a specific algorithm, but in building a crypto-agile architecture. It is this agility that will allow the company to adapt quickly and efficiently to the inevitable changes in the cyber security landscape, ensuring resilience and protecting its future.